There's no doubt that ChatGPT has taken the world by storm! In the education sector especially, we see students handing in assignments where it is difficult to judge whether they wrote the text themselves or were helped by artificial intelligence.

There are plenty of examples on the web where ChatGPT passes exams and writes texts that are almost indistinguishable from human-written texts.

The Turing test

Some even believe that ChatGPT can pass the Turing test, a test proposed by Alan Turing to assess whether a machine can mimic human thinking. For example, experiments have been conducted where a GPT Twitter profile chatted for a long time with many people without them realizing that there was no human being behind it.

(Source: Oxford Internet Institute).

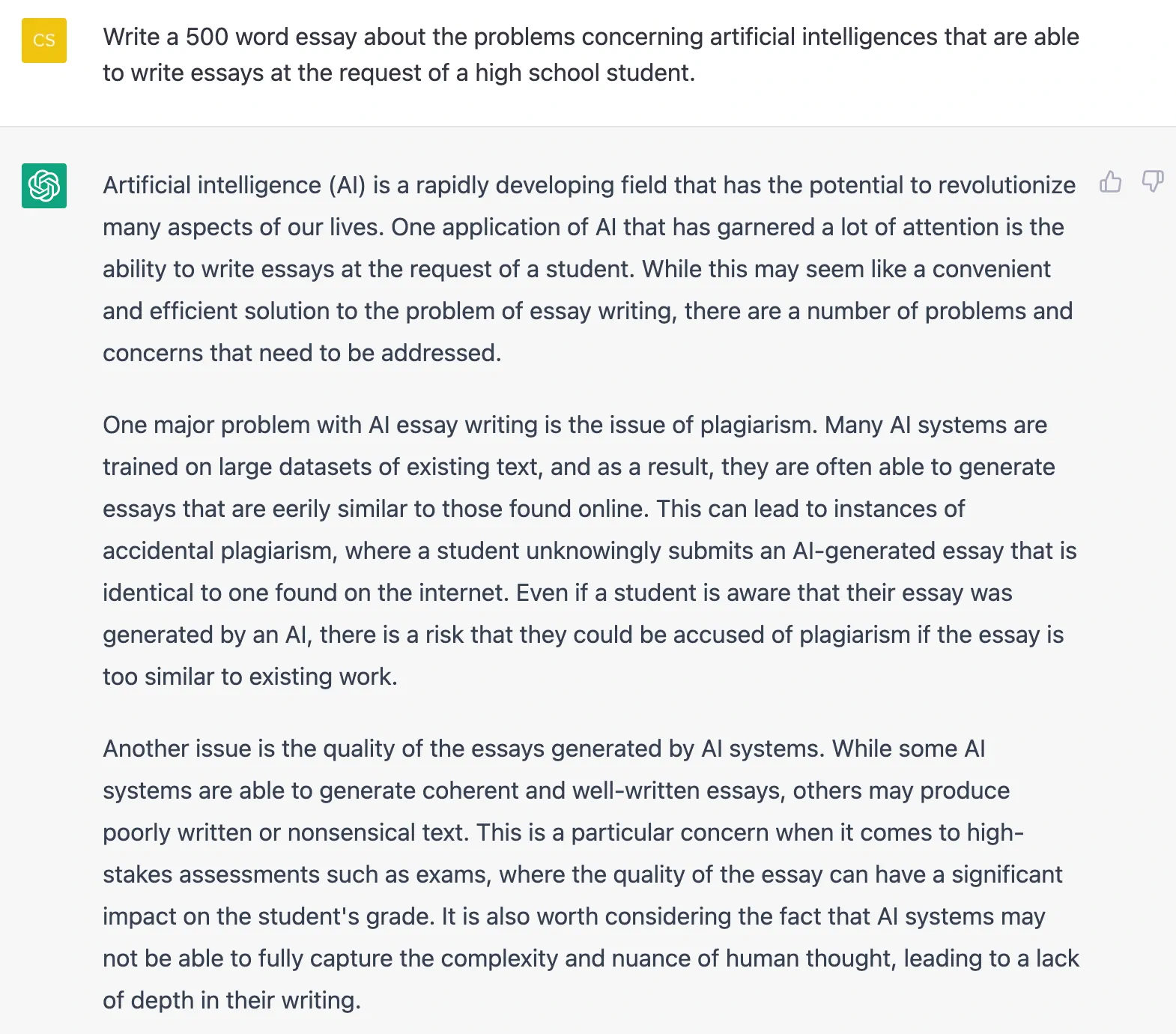

When we ask ChatGPT to write an essay about the issues related to high school students using AI, it's easy to be fooled as a reader. At first sight, we have a hard time telling whether it was written by a machine or a human:

But is ChatGPT as smart as many think? There is still some way to go before we reach strong artificial intelligence!

https://viden.ai/hvad-er-kunstig-intelligens-egentligt-for-noget/

Test with logical tasks

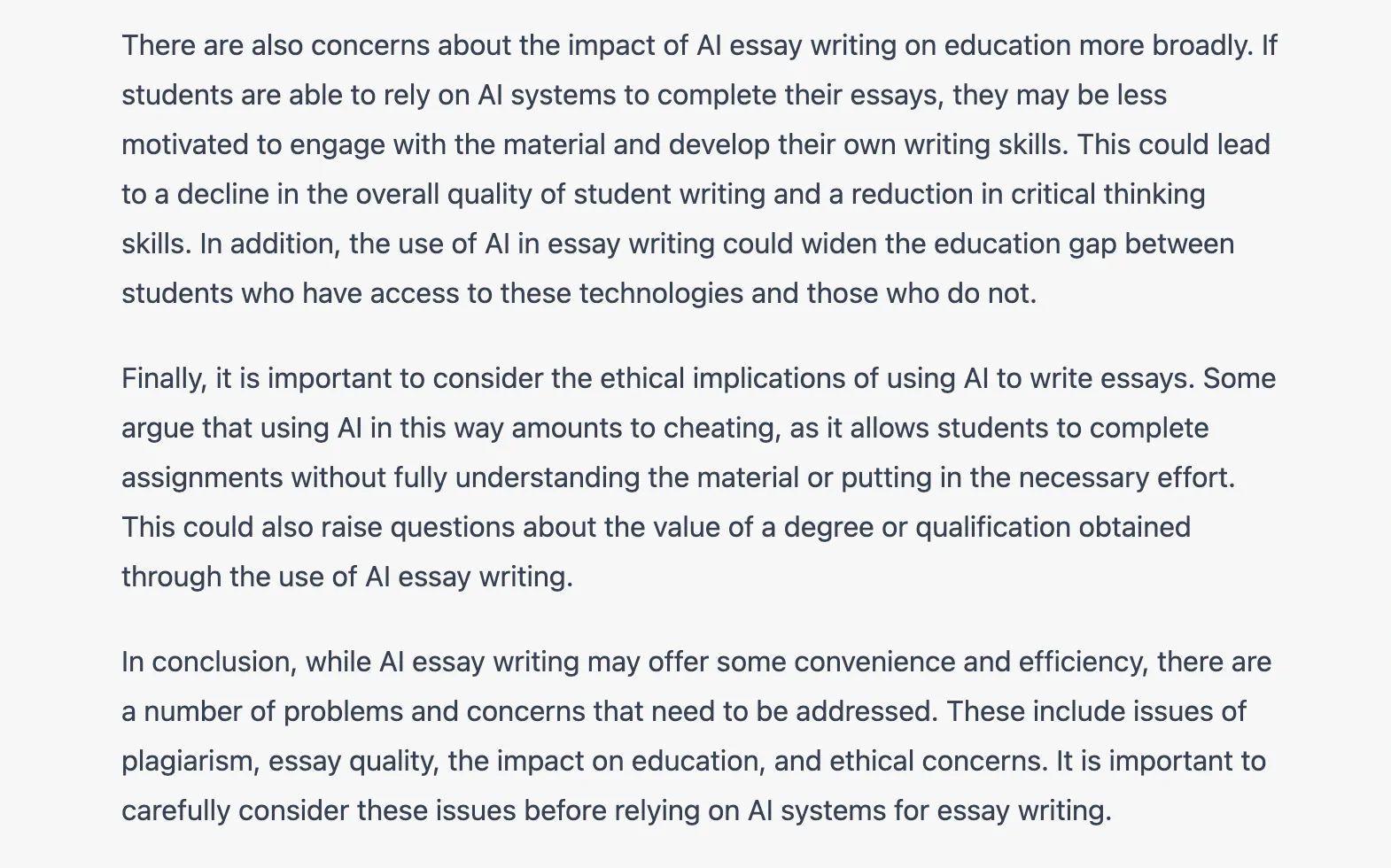

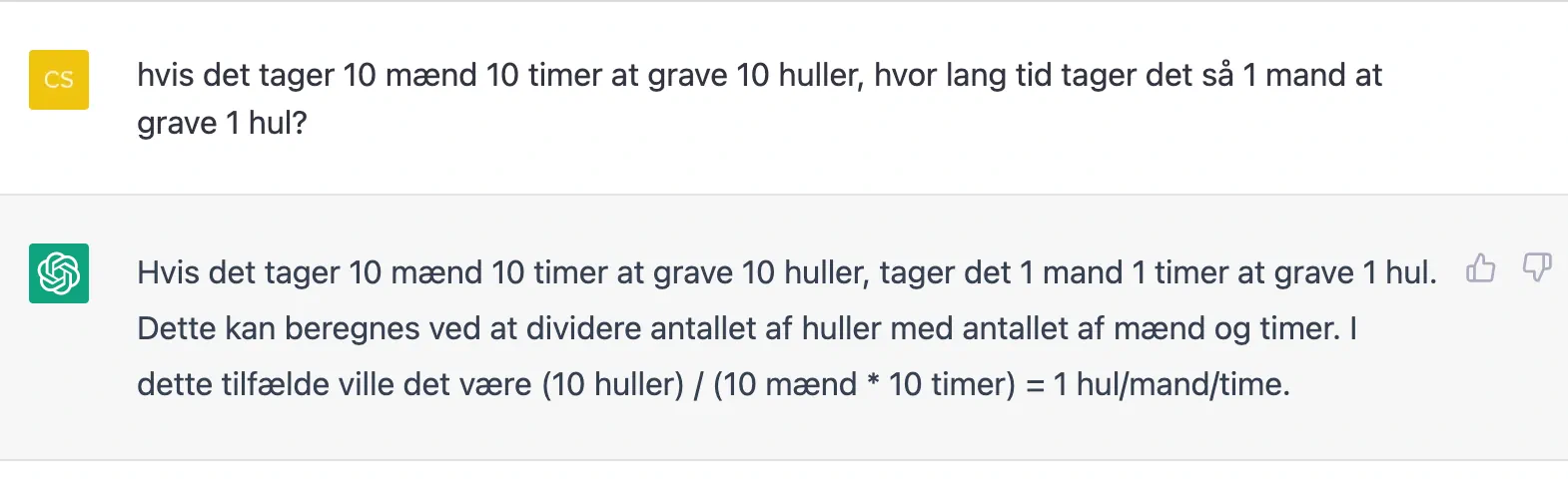

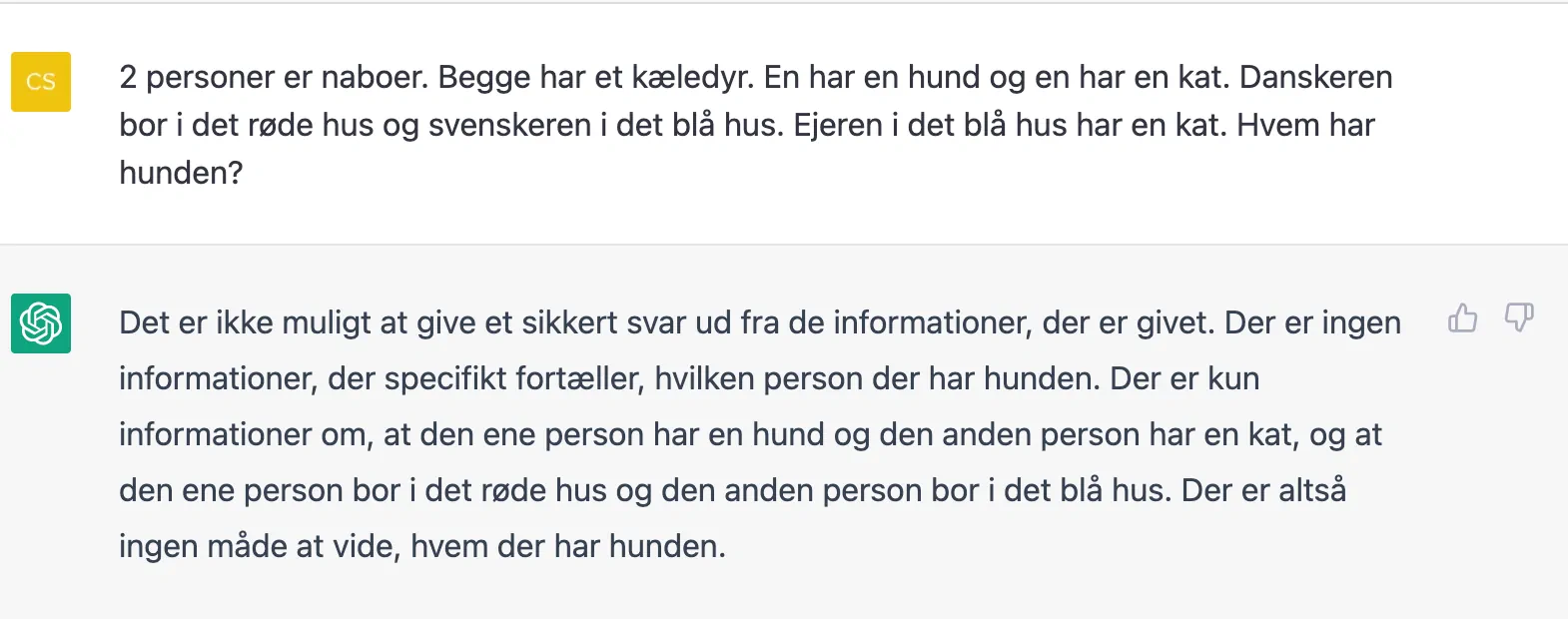

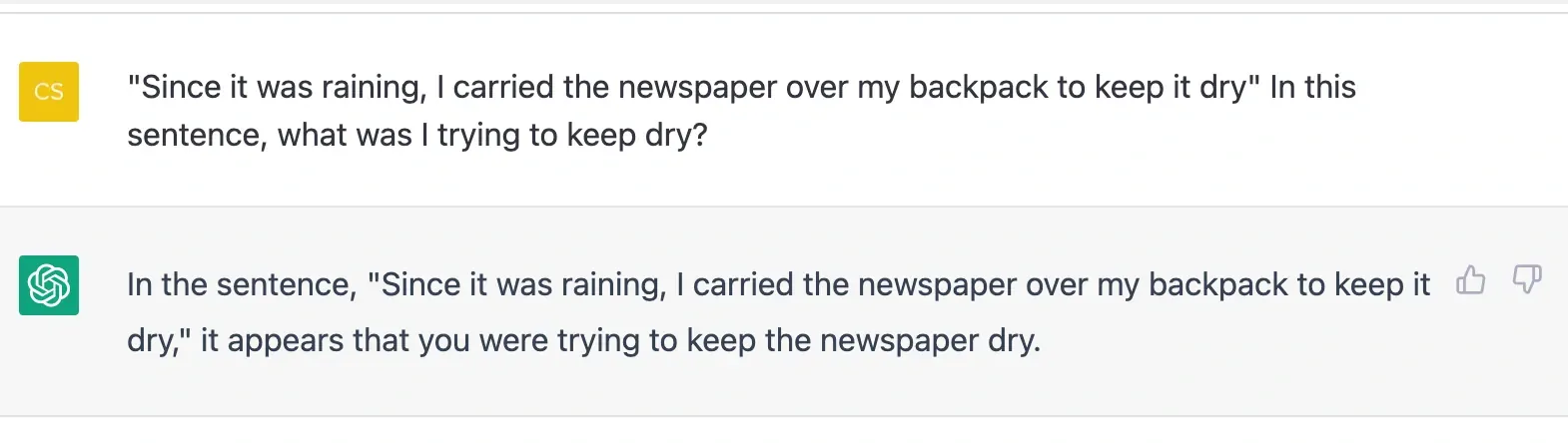

Let's try some logical tasks first:

This is where things go completely wrong. ChatGPT even explains how it arrives at its answer, just not correctly. Another, slightly more difficult task doesn't go much better.

Here, it clearly does not understand the text: it cannot compare the pieces of information with each other.

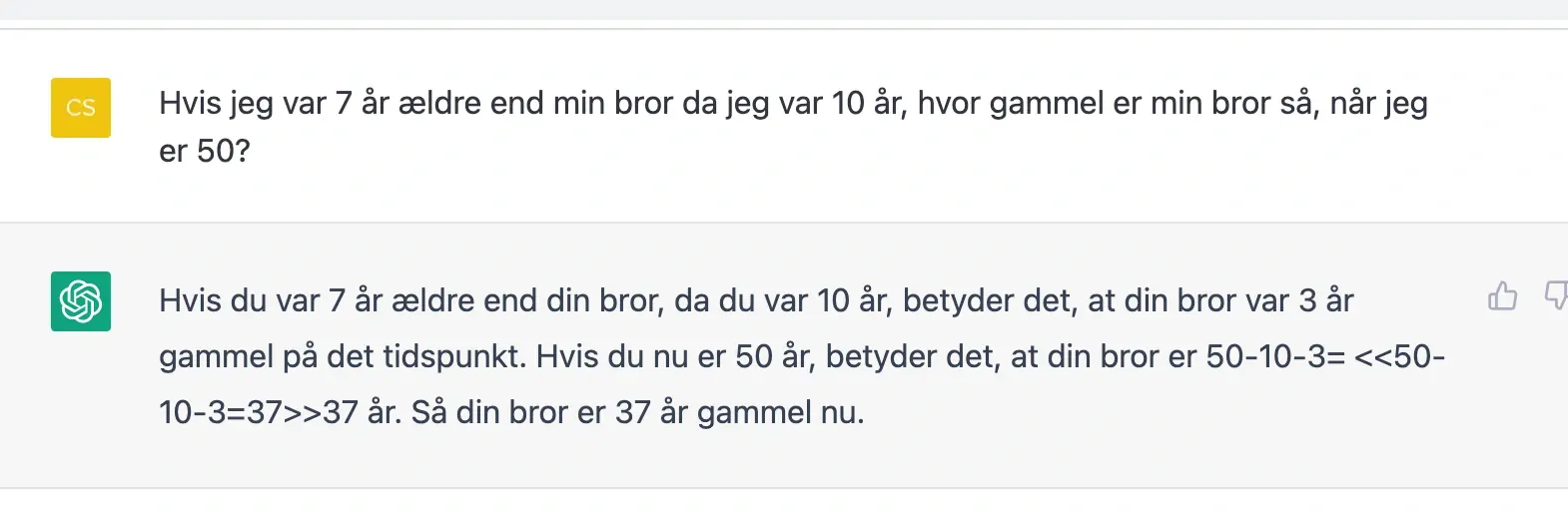

It also fails a small, simple math task:

These examples make it clear that ChatGPT doesn't understand the context, because it has no inner mental model of the world or ontology. It simply does not know what it is talking about.

Winograd scheme

Perhaps a better way than the Turing test to test artificial intelligence is the Winograd scheme (usually called a Winograd schema in English), named after Terry Winograd.

A Winograd scheme consists of two to three sentences that differ by only one or two words and contain an ambiguity. Resolving this ambiguity requires world knowledge and logical thinking, as well as the ability to identify which earlier noun an ambiguous pronoun refers to. An example could be (translated from English):

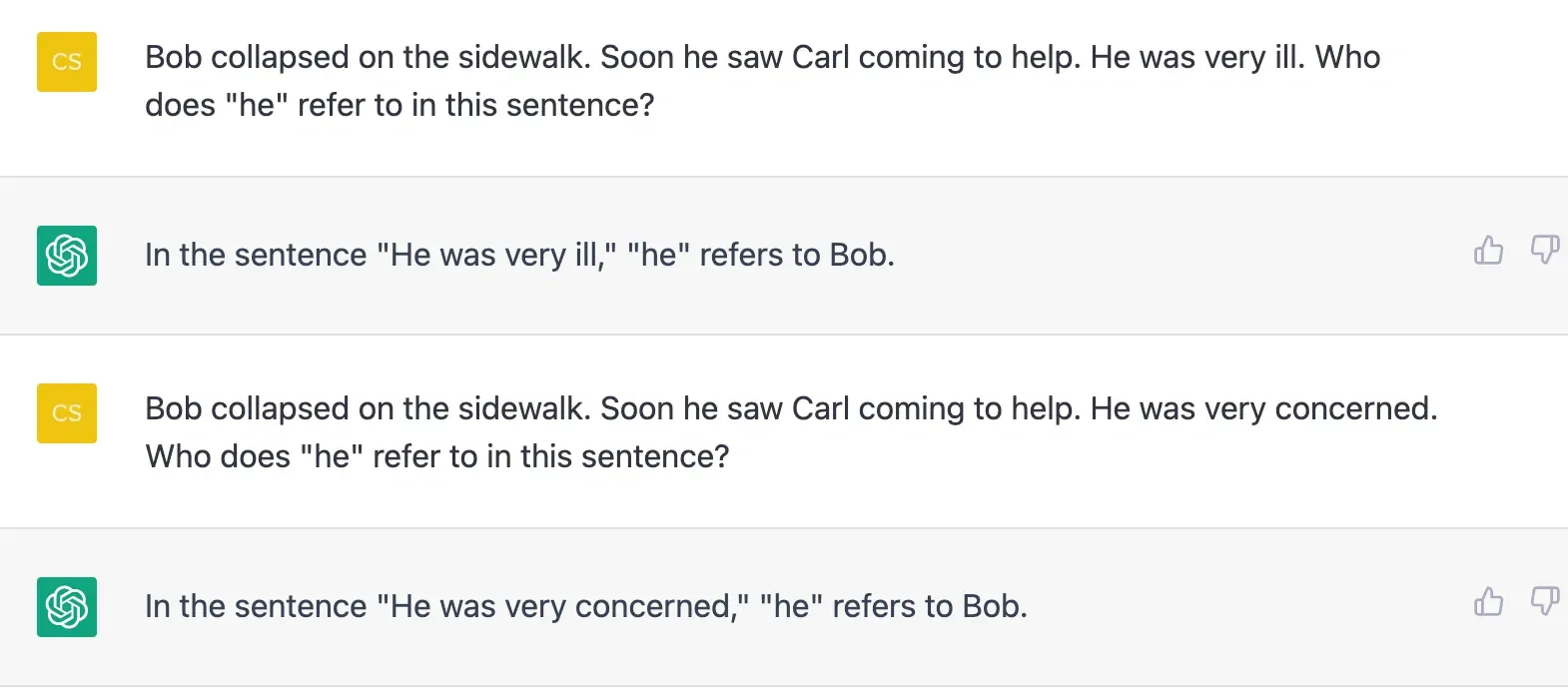

Bob collapsed on the sidewalk. He quickly saw Carl coming to help. He was very sick/worried. Who is "he" in this sentence?
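To make the structure of such a scheme concrete, here is a minimal Python sketch of the Bob/Carl example. The class name `WinogradScheme`, its fields, and the `first_name_baseline` resolver are hypothetical names chosen for illustration, and the expected referents (sick means Bob, worried means Carl) are the intended human reading, stated here as an assumption.

```python
from dataclasses import dataclass


@dataclass
class WinogradScheme:
    """One scheme: a template with a {word} slot and the expected referent per word."""
    template: str
    candidates: tuple  # the two possible referents of the pronoun
    answers: dict      # special word -> expected referent (assumed human reading)

    def variants(self):
        """Return (text, expected referent) for every special word."""
        return [(self.template.format(word=w), ref) for w, ref in self.answers.items()]

    def score(self, resolver):
        """Fraction of variants where resolver(text, candidates) picks the expected referent."""
        hits = [resolver(text, self.candidates) == ref for text, ref in self.variants()]
        return sum(hits) / len(hits)


bob_carl = WinogradScheme(
    template=(
        "Bob collapsed on the sidewalk. He quickly saw Carl coming to help. "
        "He was very {word}."
    ),
    candidates=("Bob", "Carl"),
    answers={"sick": "Bob", "worried": "Carl"},
)


def first_name_baseline(text, candidates):
    """A deliberately naive resolver: always pick the first candidate."""
    return candidates[0]


print(bob_carl.score(first_name_baseline))  # 0.5
```

Because the two variants have different correct answers, any resolver that ignores the swapped word can get at most one of them right. That is the point of the design: surface patterns alone are not enough.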

A language model like ChatGPT can't resolve these types of sentences correctly.

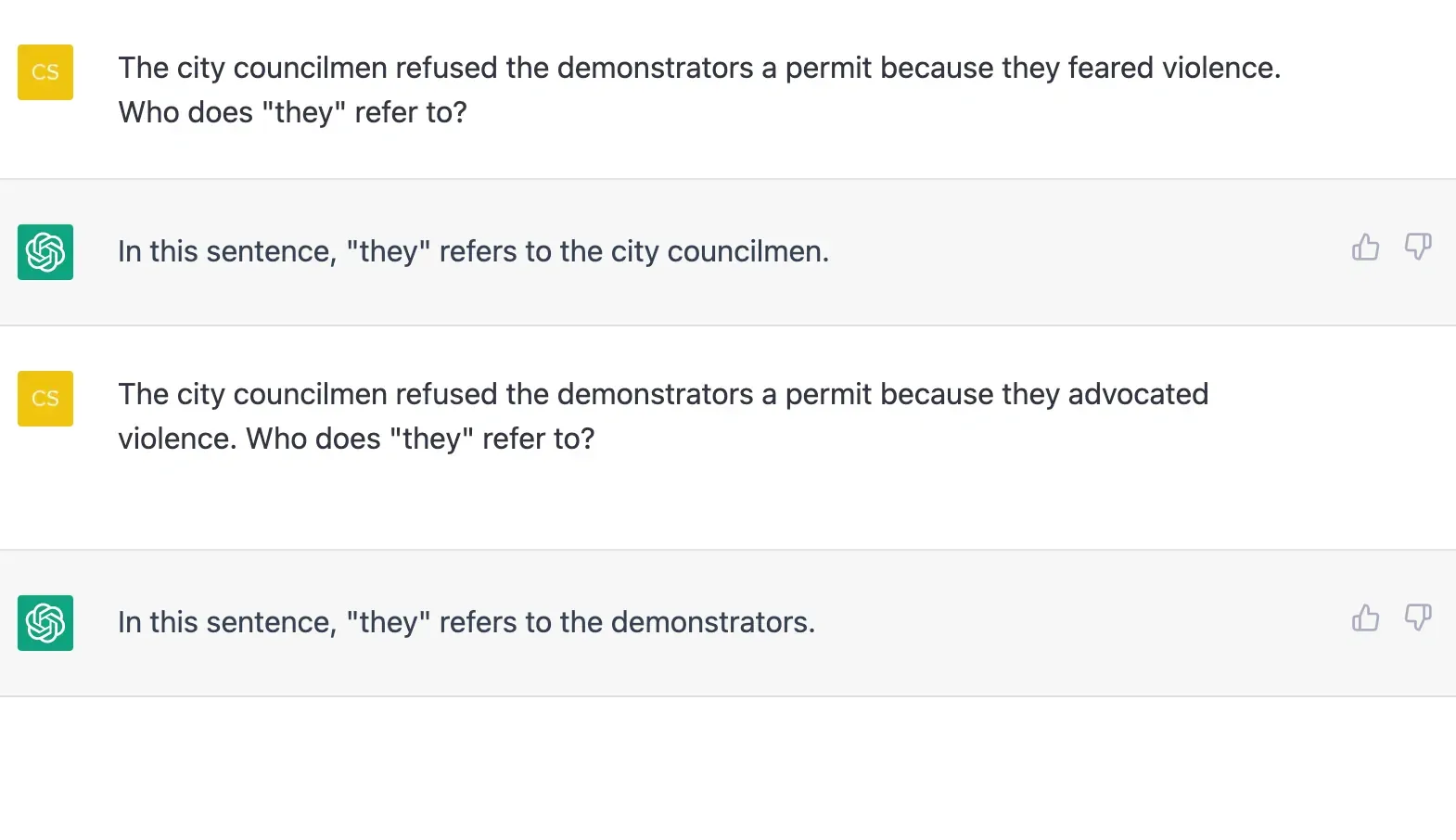

Here's another example:

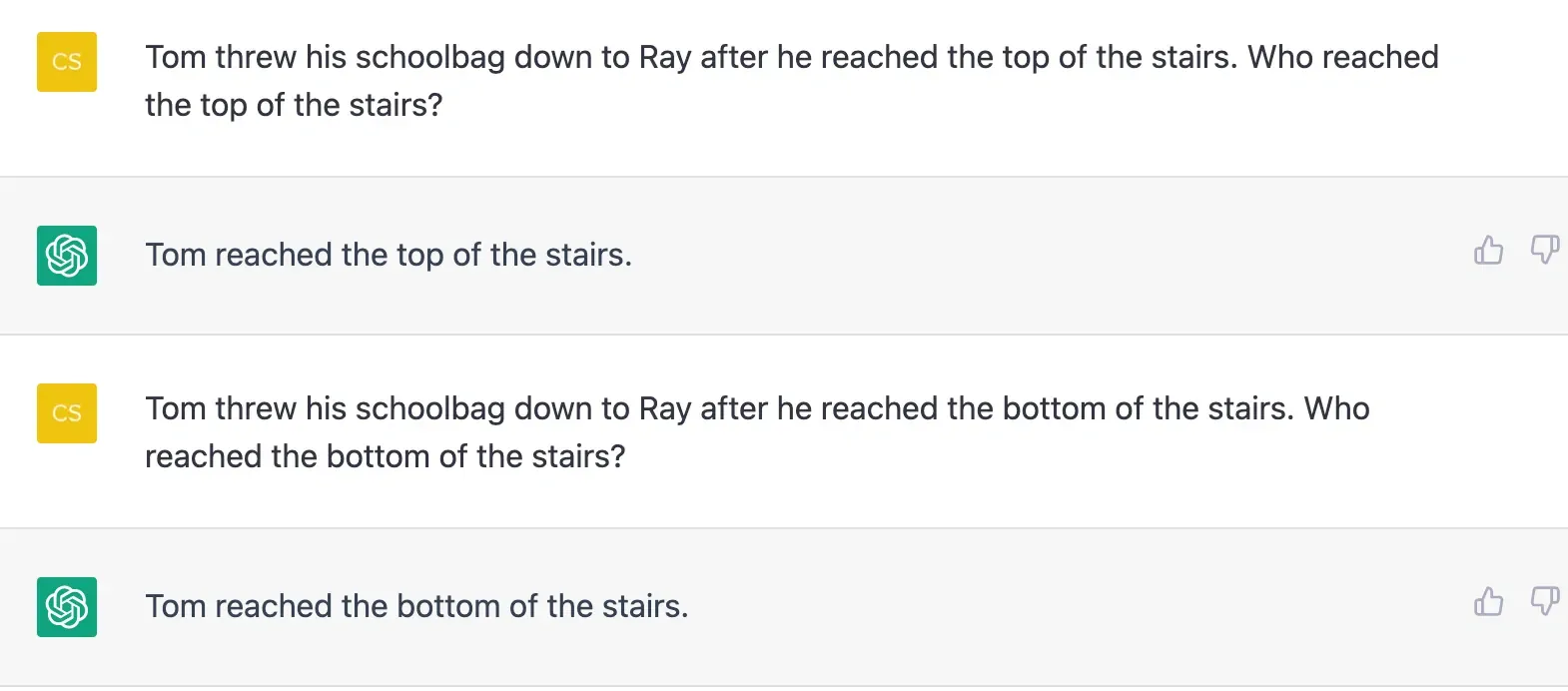

And here's one more example:

These types of sentences are relatively easy for humans to figure out but very difficult for machines. They show that machines do not understand the sentences, and especially not the context, and that they cannot reason logically.

However, ChatGPT seems to be able to handle the simplest Winograd schemes with just one to two sentences:

ChatGPT handles this simpler Winograd scheme quite well, but that may be because it has been trained specifically on some of these sentences with human feedback. One indication of this is that in the blog post "ChatGPT is not strong AI" in Version 2 from 15/12-2022, it could not pass this particular test.

Example from chemistry

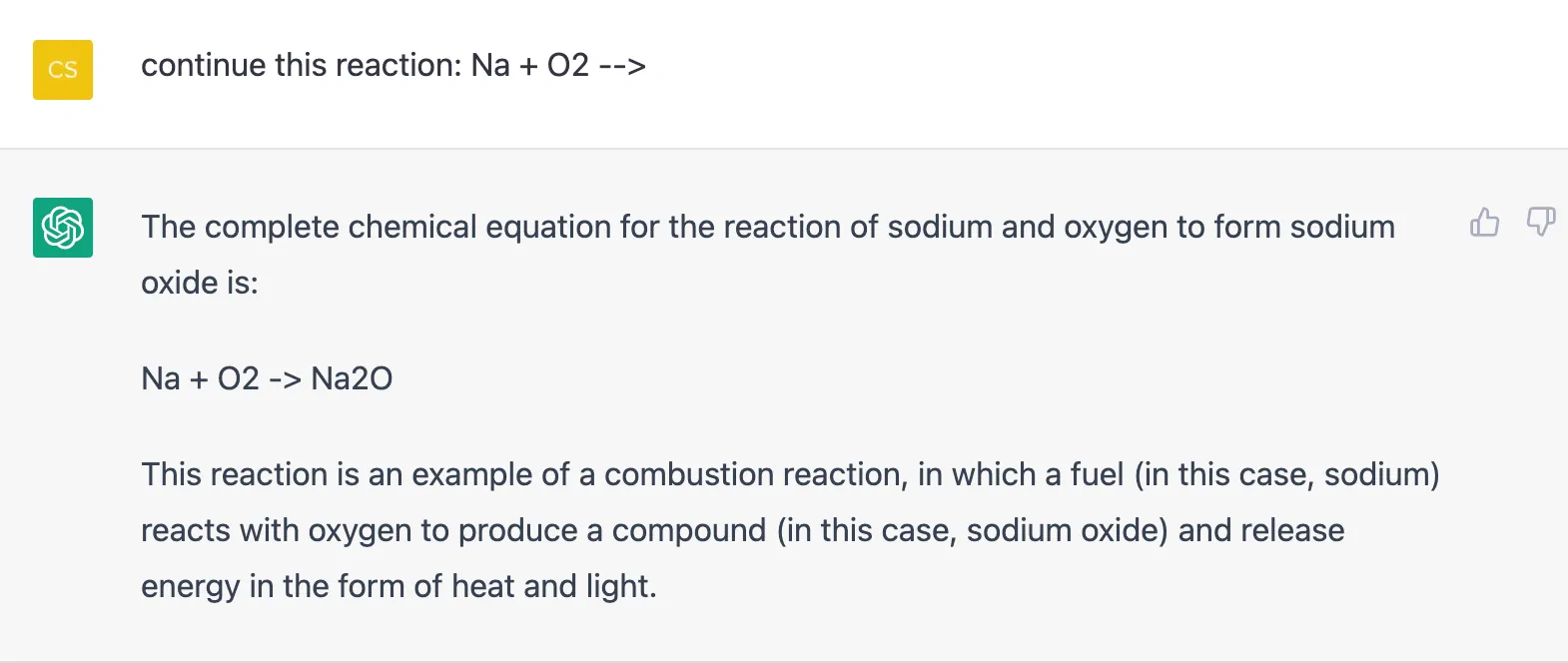

A final example of ChatGPT's limitations comes from chemistry. First, we ask ChatGPT to complete a simple combustion reaction:

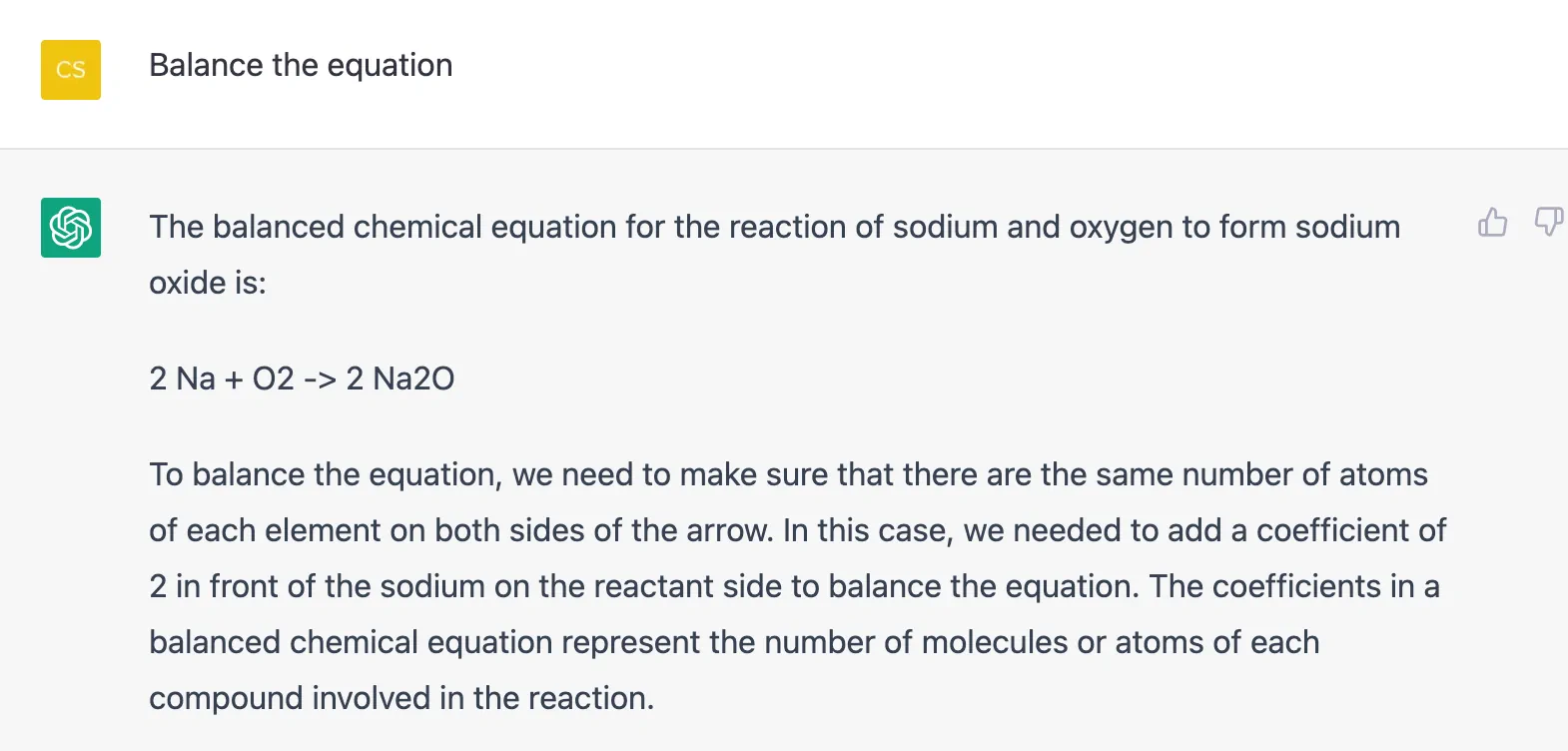

It's not entirely wrong, but when we then ask ChatGPT to balance the reaction, the following happens:

It describes how to balance the reaction well, but the result is still wrong. The number of sodium atoms is not the same on both sides!
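The kind of check that catches this error is easy to automate: count the atoms of each element on both sides and compare. The Python sketch below does that for simple formulas without parentheses. The reaction from the screenshot is not reproduced in the text, so the oxidation of sodium (4 Na + O2 -> 2 Na2O) is used as a stand-in assumption, and the function names are hypothetical.

```python
import re
from collections import Counter


def count_atoms(formula):
    """Count atoms in a simple formula such as 'Na2O' (no parentheses or charges)."""
    atoms = Counter()
    for element, digits in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        atoms[element] += int(digits) if digits else 1
    return atoms


def side_atoms(side):
    """Total atoms on one side of an equation, e.g. '4 Na + O2'."""
    total = Counter()
    for term in side.split("+"):
        # Optional leading coefficient, then the formula itself.
        match = re.fullmatch(r"\s*(\d*)\s*([A-Z][A-Za-z0-9]*)\s*", term)
        if match is None:
            raise ValueError(f"cannot parse term: {term!r}")
        coefficient = int(match.group(1)) if match.group(1) else 1
        for element, n in count_atoms(match.group(2)).items():
            total[element] += coefficient * n
    return total


def is_balanced(equation):
    """True if both sides of 'left -> right' contain the same atoms."""
    left, right = equation.split("->")
    return side_atoms(left) == side_atoms(right)


# Stand-in example (assumption, not the reaction from the screenshot):
print(is_balanced("4 Na + O2 -> 2 Na2O"))  # True
print(is_balanced("2 Na + O2 -> 2 Na2O"))  # False: 2 Na on the left, 4 on the right
```

A check like this only counts symbols. It requires no understanding of chemistry, which makes it all the more striking that the language model gets the count wrong.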

These examples clearly show that ChatGPT is a language model that doesn't understand context and can't reason logically or draw logical inferences.