At Viden.ai, we have spent a lot of time writing about how teachers can investigate whether a text was written by a chatbot or by the student. Plagiarism and cheating are what everyone across the education system is talking about at the moment, from the individual teacher, who feels pressured every day by the new ways students complete assignments, to institutions that want to ban artificial intelligence in teaching completely. But this discussion distracts attention from what is more interesting to debate, namely the ethical aspects of the technology. Here, you can ask several questions: Why should we work with artificial intelligence in education? When should we include it? And last but not least: When should we not make it possible to use the technology?

In this and future articles, we will address many ethical issues concerning the use of the new language models in education. We want to encourage discussion in class, in the teachers' room, among management, and preferably in the Ministry of Education. There will be no right or wrong answers, only questions that should help start a discussion of the ethical dilemmas of using artificial intelligence in teaching.

Privacy

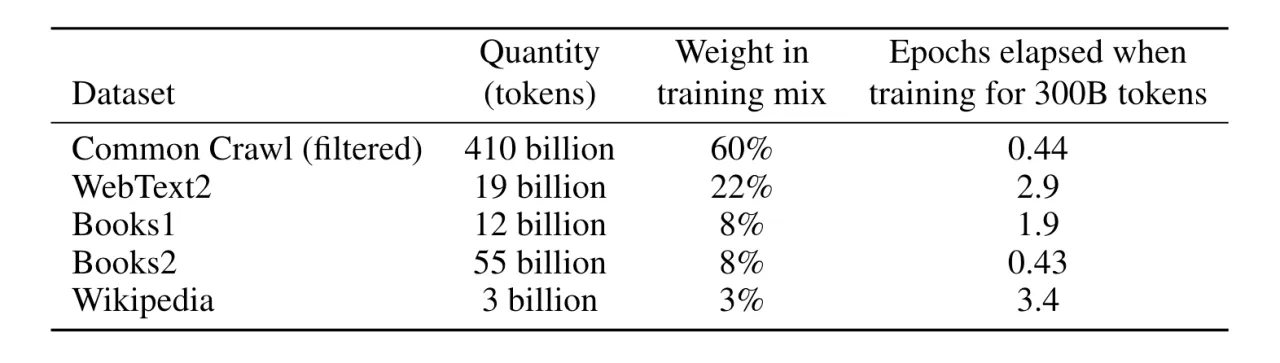

GPT-3.5 is trained on data freely available on the web, such as texts from Wikipedia, books, and crawled web pages. This means there may be content from social media, personal websites, and chat or even email messages if they were publicly available. A major challenge is that this data is mostly collected from English-language sources, so there will naturally be a distortion of information when we write to GPT-3.5 in Danish. Only about 0.45% of the content is believed to be Danish.

ChatGPT uses a dataset that ends in the summer of 2021, while Bing uses the same dataset combined with information from the Bing search engine. It is not entirely clear what data the language models were trained on, but we know that the data sometimes contains identifiable personal information. This includes names, emails, phone numbers, addresses, and social media accounts. Sometimes, we encounter this data in the output of the language models.

Harvard professor Shoshana Zuboff believes that language models are part of surveillance capitalism, which has collected large amounts of user data in recent years in order to monetize it. We should therefore be extra vigilant when using the technology in education, as we help create a dependence in students while simultaneously handing data about them to large American tech giants.

Therefore, you should not enter sensitive personal information, for example by having ChatGPT respond to internal emails, assess students' assignments, prepare resumes, sort student lists, or handle other types of information that we do not normally share publicly. In the online version of ChatGPT, all your entries are linked to your email and phone number, and this information can be used to improve the service but not to train the model, at least not yet. We don't know if OpenAI is training a new version, but since it is a generative pre-trained transformer, it doesn't learn from the inputs it receives. It has forgotten everything the next time you write to it.

However, a good litmus test is this: if you would not write the information on a postcard, you should not write it to a chatbot.

Therefore, we must have an ethical discussion about how we handle privacy issues in connection with language models, and we must be able to say no when our limit has been reached.

Questions for discussion

- What ethical guidelines apply to your school's use of artificial intelligence?

- What does it mean for our use of the technology that it is governed by market forces and did not originate as a tool for education?

- How can you ensure that the information you give to artificial intelligence does not contain sensitive personal information?

- When is it okay to use artificial intelligence, and for what?

- How can educational institutions ensure that students use the tool in an ethical way?

- What do we do if the company behind the artificial intelligence profiles our students?

- Should surveillance capitalism dictate which tools are developed and used in teaching?

Sources