We've already seen several examples of how ChatGPT can be used in education, but so far only through OpenAI's website. The drawback is that you constantly have to write a message and wait for ChatGPT to respond. You interpret the answer, write again, wait for a reply, interpret it, write again, and so on. This approach is very user-friendly, but it can feel like a slow way to work with artificial intelligence.

But what if generative AI could keep working on its own answers independently? In generative AI, this method is called self-prompting or auto-prompting. The user writes a goal, after which the language model devises a strategy and prioritizes the steps needed to reach it. It then writes and executes prompts, saves the responses, and uses the collected data to write new prompts. In other words, it runs in many loops.

These loops can include anything ChatGPT can do, e.g., web searches, summarizing texts, writing code, translating texts, etc. And every time it loops, the language model "thinks" about new courses of action.
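The loop described above can be sketched in Python. This is a minimal, offline illustration under simplifying assumptions, not AutoGPT's actual implementation: `fake_model` and `propose_next_task` are hypothetical stand-ins for calls to a language model, and the two starting tasks are made up for the example.

```python
from __future__ import annotations

from collections import deque
from typing import Optional


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a language-model API call. It just echoes
    # the task line so that the loop can run offline.
    task_line = prompt.splitlines()[1]  # the line starting with "Task: "
    return "notes on " + task_line[len("Task: "):]


def propose_next_task(goal: str, done: list[str]) -> Optional[str]:
    # Hypothetical stand-in for asking the model "what should I do next?".
    # Here it adds one follow-up task once the first two tasks are done.
    if len(done) == 2:
        return f"write a report on {goal}"
    return None


def run_agent(goal: str, max_loops: int = 10) -> list[str]:
    # Initial strategy: a prioritized queue of tasks derived from the goal.
    tasks = deque([f"search for {goal}", f"compare prices of {goal}"])
    done: list[str] = []
    memory: list[str] = []  # saved responses, reused in later prompts
    for _ in range(max_loops):  # cap the loops so the agent cannot run forever
        if not tasks:
            break
        task = tasks.popleft()
        # Each new prompt includes everything collected so far.
        prompt = f"Goal: {goal}\nTask: {task}\nContext: {'; '.join(memory)}"
        memory.append(fake_model(prompt))
        done.append(task)
        next_task = propose_next_task(goal, done)
        if next_task is not None:
            tasks.append(next_task)
    return memory
```

Calling `run_agent("waterproof shoes")` returns three notes: two for the initial tasks and one for the report task the agent added by itself. The `max_loops` cap is a deliberate design choice; without it, an agent that keeps proposing new tasks would never stop.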

When I write "thinking" in quotation marks, it's because generative AI can't think like a human but tries to find different strategies to solve the task based on its code.

Let's stop attributing human qualities to generative AI: there is no real thinking involved, and we have not reached what is called AGI. AGI stands for "Artificial General Intelligence" and refers to a level of artificial intelligence that can perform a wide range of cognitive tasks as well as a human. Rather than being specialized in a single task or area, AGI would adapt to different tasks and circumstances and learn generally, just like humans do. We are not there yet.

In practice, this means you can start an autonomous AI agent and let it run on autopilot while it works toward the goals. AutoGPT, for example, generates its own ideas and suggestions based on the goals set by the user.

In this table, I've listed the differences between AutoGPT and ChatGPT:

| AutoGPT | ChatGPT |
|---|---|
| Chain prompting | Simple prompting |
| Autonomous actions | User-driven input |
| Asynchronous | Synchronous |
| Data-driven strategies | User-driven strategies |
| Can run without user involvement | Requires a high degree of user involvement |
| Guided through goals | Guided through natural language |

Four ways to test AutoGPT yourself

Below, I briefly review AgentGPT, CognysysAI, and GodMode. All three let you use your own OpenAI API key, and with a key the tools work much better. If you don't have a key, you can create one here: https://platform.openai.com/account/api-keys. Note that API usage is billed separately from a paid ChatGPT subscription, and letting artificial intelligence run in loops consumes a lot of tokens.
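Why do loops cost so many tokens? A rough calculation helps. The sketch below assumes that every loop resends all earlier responses as context, so each prompt is longer than the last. The token counts and the price per 1,000 tokens are hypothetical placeholders, not OpenAI's actual rates.

```python
def total_tokens(loops: int, base_prompt: int, response: int) -> int:
    """Total tokens processed when every loop resends all earlier responses.

    Loop i (counting from 0) sends base_prompt + i * response prompt tokens
    and receives `response` tokens back.
    """
    total = 0
    for i in range(loops):
        prompt = base_prompt + i * response  # context grows every loop
        total += prompt + response
    return total


def estimated_cost(tokens: int, price_per_1k: float) -> float:
    # price_per_1k is a hypothetical placeholder; check current API pricing.
    return tokens / 1000 * price_per_1k
```

With these made-up numbers, `total_tokens(10, 500, 300)` gives 21,500 tokens, while `total_tokens(20, 500, 300)` gives 73,000: doubling the number of loops more than triples the token count, because the resent context grows with every loop.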

No programming skills are required for the first three tools, and they're fairly easy to use (AutoGPT itself is harder to get started with). All of them draw on OpenAI's API and use GPT-3.5. If your API account has access to GPT-4, you can use it instead, but beware: it can get expensive.

You get the best results if you write in English.

1. AgentGPT

AgentGPT is one of the first versions of AutoGPT with a graphical interface. Here, you can create autonomous AI agents that pursue a goal. Along the way, the agent comes up with new tasks, performs them, and uses the results in the next loop.

The website works pretty well for English texts, and there aren't that many setting options. For example, you can only set one goal it should try to solve.

AgentGPT is open source, and you can download it yourself via GitHub.

2. CognysysAI

CognysysAI offers a few more options for configuring the agent, and here you can set up to three goals.

CognysysAI is proprietary software, and the company states that it is not based on AgentGPT or BabyAGI.

The agent can be tested without an API key for OpenAI.

3. GodMode

GodMode's interface is very similar to ChatGPT's: you just give it a task, and it will try to solve it. The program has few settings and is very simple to use.

GodMode is also proprietary software, so we don't know what's behind the code or how it retrieves information.

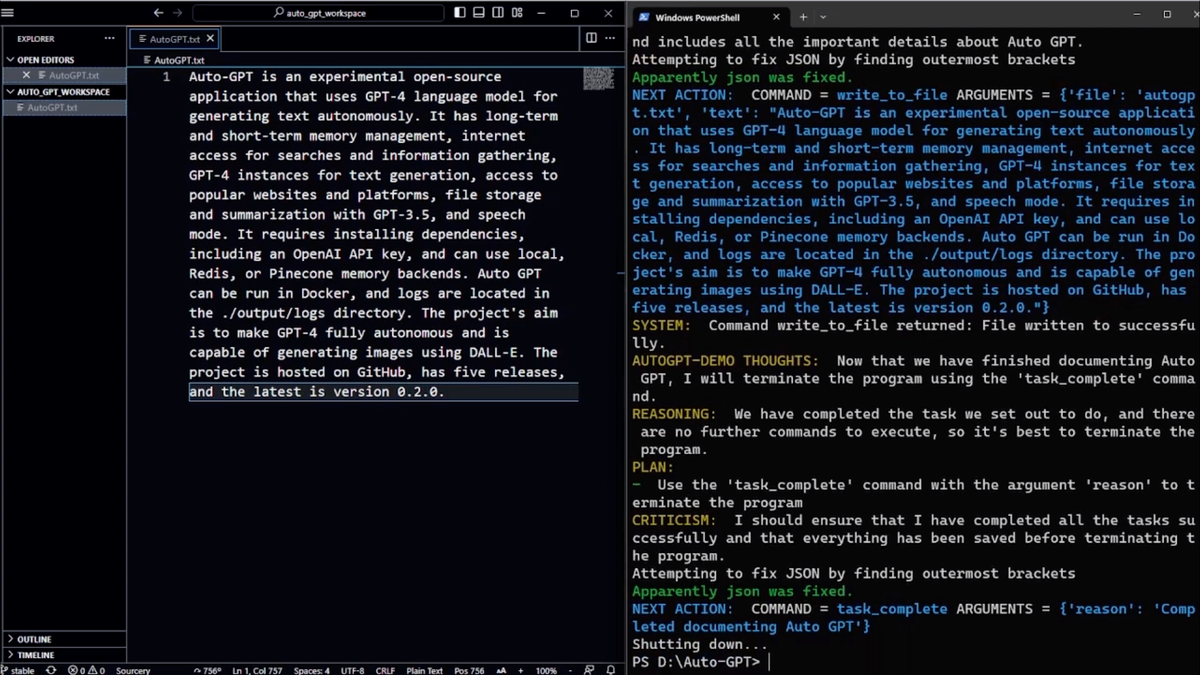

4. AutoGPT

If you want to run things in your terminal, you should try AutoGPT itself, which is behind the three tools above. Here you can set up to five goals, but it takes a little more work to get it running.

What can we use the AI agents for in education?

Incorporating automated processes into teaching can be daunting, but it opens up several opportunities for tasks that would otherwise take a long time to carry out yourself. Keep in mind that AI agents run asynchronously, so we can run several at the same time.

Below is an example of how to use AutoGPT for market research. At the bottom of the article, I have listed several sources, most of which include further examples.

Market research

Doing market research can be demanding, as you have to go through many websites and compare products. In this assignment, we pretend to be a shoe company that wants to investigate the market for waterproof shoes. More generally, whenever you can define a goal you want to investigate, you can let the agent review scientific articles (if they are available online), websites, news, or other texts.

To solve this task, I set two goals (you can set up to five goals in AutoGPT).

1st goal: Do market research on waterproof shoes and their prices.

2nd goal: Find the five biggest competitors and give me a report on the pros and cons of the shoes.
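Goals like these end up as part of the prompt the agent sends to the language model. The sketch below shows one plausible way to assemble such a prompt from a name, a role, and a numbered list of goals; the wording, the agent name `ShoeResearchGPT`, and the `build_prompt` helper are hypothetical, and AutoGPT's real prompt template is not reproduced here.

```python
from __future__ import annotations

MAX_GOALS = 5  # AutoGPT's goal limit, as described in this article


def build_prompt(name: str, role: str, goals: list[str]) -> str:
    """Assemble a hypothetical agent prompt from a name, a role, and goals."""
    if not 1 <= len(goals) <= MAX_GOALS:
        raise ValueError(f"expected 1 to {MAX_GOALS} goals, got {len(goals)}")
    numbered = "\n".join(f"{i}. {goal}" for i, goal in enumerate(goals, start=1))
    return f"You are {name}, {role}\n\nGOALS:\n{numbered}"


goals = [
    "Do market research on waterproof shoes and their prices.",
    "Find the five biggest competitors and give me a report on the pros and cons of the shoes.",
]
print(build_prompt("ShoeResearchGPT", "an AI that analyzes the market for waterproof shoes.", goals))
```

Numbering the goals gives the model a fixed order to work through, and the length check mirrors the five-goal limit mentioned above.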

Below is the result after running AutoGPT for about 10 minutes.

Pros: flexible sole, bungee lacing system, includes recycled materials

Cons: not suitable for long runs, not very breathable

Allbirds Wool Runners - $160.34

Pros: good for cold weather, comfortable for short walks and standing

Cons: lack of upper support, not suitable for long-term use

Cloudflyer Waterproof - $179.99

Pros: 100% waterproof, lightweight cushioning, Helion super foam

Cons: wider outsole, not very breathable

Salomon Speedcross 5 GTX - $140

Pros: Gore-Tex waterproof membrane, Contagrip outsole, Quicklace system

Cons: not very breathable, not suitable for road running

Brooks Ghost 13 GTX - $160

Pros: Gore-Tex waterproof membrane, BioMoGo DNA cushioning, Segmented Crash Pad

Cons: not very breathable, not suitable for trail running

The example is quite simple, but it gives a good picture of what AutoGPT can be used for.

Challenges of the intelligent standalone AI agents

In one of my tests of AutoGPT, I asked it to identify ten Danish researchers who work with artificial intelligence in education. Then, for each person whose contact information it found, it had to examine which scientific articles that person had written. Finally, I asked it to summarize everything and write an email expressing interest in each person's field of research.

This resulted in ten unique emails to the ten researchers. What I didn't expect, however, was that because I had asked AutoGPT to write the emails, it also assumed that I wanted to send them. AutoGPT then began writing an email-sending function in Python, which the program subsequently wanted to execute.

Stop here and think about it...

The AI writes code inside a system I use, and I have no idea how that code works. It can also feed itself new code in an endless series of loops, driven by a goal and guided by a set of rules I cannot see. What if there are errors in that rulebook? What if I had asked it to craft and send phishing emails to the researchers above?

What is both interesting and disturbing is that the program constantly proposes what should be done next, including writing code and executing it. We must be careful here: the example above is innocent and fairly easy to understand, but what happens when we cannot figure out the code being written? And can we always assume that everyone using artificial intelligence will be equally careful?
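One way to reduce this risk is to keep a human in the loop: the agent may propose actions, but anything risky, such as running code or sending email, needs explicit approval first. The sketch below is a hypothetical approval gate; the action names and the `RISKY_ACTIONS` set are assumptions for illustration, not AutoGPT's code.

```python
from typing import Callable

# Hypothetical actions that should never run without a human's approval.
RISKY_ACTIONS = {"execute_code", "send_email"}


def run_action(action: str, payload: str,
               approve: Callable[[str, str], bool]) -> str:
    """Run an agent-proposed action, asking a human first if it is risky."""
    if action in RISKY_ACTIONS and not approve(action, payload):
        return f"blocked: {action}"
    # A real agent would dispatch to the actual tool here; this sketch
    # only reports what would have happened.
    return f"ran: {action}"
```

In practice, `approve` could ask the user directly, e.g. `lambda action, payload: input(f"Allow {action}? [y/N] ").strip().lower() == "y"`, so that anything other than an explicit "y" blocks the action. Denying by default is the safer choice: a typo or an empty answer stops the email instead of sending it.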

"It is hard to see how you can prevent the bad actors from using it for bad things", Geoffrey Hinton

According to Geoffrey Hinton, AutoGPT pushes development in a direction where artificial intelligence works autonomously and users let machines learn by themselves. His major concern is that we might lose control if artificial intelligence becomes more intelligent than humans. At the same time, the technology can be abused, and according to Hinton, we will see a lot of that soon.

Sources