This article briefly discusses the AI Regulation and what it might mean for education. Before we get that far, we want to make clear that we are not lawyers or experts in the field. We spend a long time writing our texts, but errors can creep in, and in this case we are writing about legislation that has not yet been adopted. In addition, the AI Regulation itself is still changing (as of May 11, 2023), and this article is not updated every time changes are made.

National strategy for artificial intelligence

In 2019, Denmark examined the new opportunities and challenges with artificial intelligence in the National Strategy for Artificial Intelligence report.

It defines four main areas:

- Denmark must have a common ethical basis for artificial intelligence with people at the center.

- Danish researchers will research and develop artificial intelligence.

- Danish companies must achieve growth by developing and using artificial intelligence.

- The public sector must use artificial intelligence to offer world-class service.

The question, however, is how to ensure a sound use of artificial intelligence.

What is the AI Regulation?

The AI Regulation is a future EU regulation that will regulate artificial intelligence in all EU member states. (A regulation is a law directly applicable in all EU member states without prior incorporation into national laws.) The regulation was proposed on 21 April 2021, and negotiations on its content are still ongoing – although a final text moved closer on 11 May 2023, when the European Parliament's committees approved a draft.

On 14 June 2023, MEPs voted in favor of the proposal, and the regulation can now be negotiated with the Council of the EU and the European Commission (in so-called trilogue negotiations). Final adoption is expected in late 2023 or early 2024.

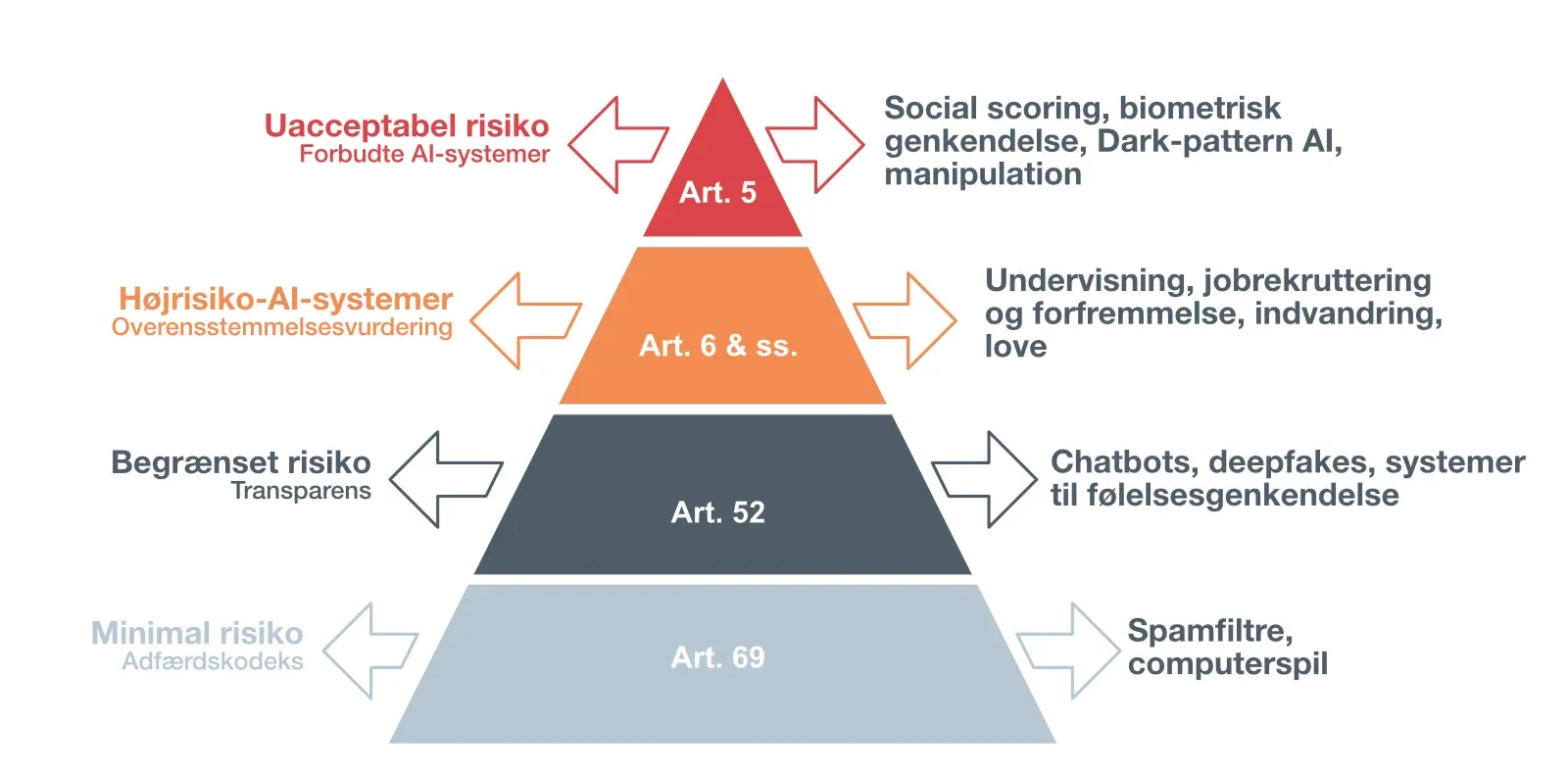

The regulation is designed as a risk-based system with four levels: unacceptable risk, high risk, limited risk, and minimal risk, and it currently consists of seven sections containing 85 articles and nine annexes. In this article, we will not go through the whole regulation but (almost) only look at the parts that we think will affect education.

The definition of an artificial intelligence system

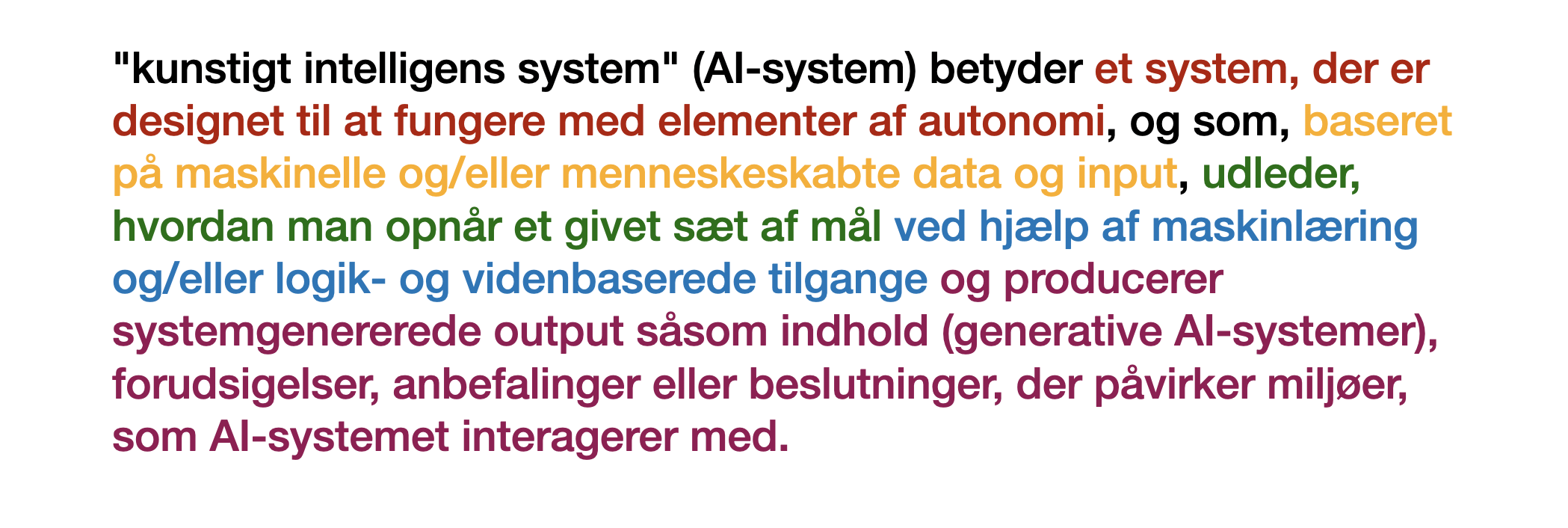

To talk about the risks of artificial intelligence, it is important to define what we mean by such a system.

Since the regulation is written in English and only translated into the other languages once it has been adopted, we show the English version of the definition here to minimize uncertainty about the correctness of the translation. The original Commission proposal from 2021 is also available in Danish.

The EU defines an artificial intelligence system in the legislative text of the regulation as follows:

"Artificial intelligence system" (AI system) means a system that is designed to operate with elements of autonomy and that, based on machine and/or human-provided data and inputs, infers how to achieve a given set of objectives using machine learning and/or logic- and knowledge based approaches, and produces system-generated outputs such as content (generative AI systems), predictions, recommendations or decisions, influencing the environments with which the AI system interacts.

That's a pretty broad definition of artificial intelligence, and it will affect many systems.

Managing the risk of AI systems

The AI regulation is designed as a risk-based system with four levels.

Prohibited AI systems

The highest level is prohibited AI systems, described in Article 5. This category covers systems that:

- exploit human consciousness, for example by distorting behavior or inflicting physical or psychological harm on the person;

- exploit people's vulnerabilities due to age or physical/mental disabilities;

- evaluate or classify a person's credibility based on social behaviour or predicted personal characteristics or traits, leading to adverse or harmful treatment of that person;

- perform real-time remote biometric identification for law enforcement purposes (with exceptions);

- indiscriminately collect biometric data from social media or CCTV footage to create facial recognition databases;

- recognize emotions in natural persons in the following areas: law enforcement, border control, workplaces, and educational institutions;

- are used for predictive policing (based on profiling, location, or previous criminal behavior).

The last three points were added by an amendment on May 11, 2023.

High-risk AI systems

The next level is high-risk AI systems, described in Article 6 and Annex 3. Here, you will find a wide range of AI systems, such as systems for:

- critical infrastructure;

- job recruitment and promotion;

- credit ratings;

- life insurance;

- public social benefits and services;

- certain AI systems for education.

Annex 3 states the following about education:

(a) AI systems intended to be used to determine access or assign natural persons to educational and vocational training institutions;

(b) AI systems intended to be used to assess students in educational and vocational training institutions and for assessing participants in tests commonly required for admission to educational institutions.

A Danish translation of the proposed regulation explains the reasoning as follows:

AI systems used in education or training, in particular for the admission or allocation of persons to educational establishments or for the evaluation of persons during tests as part of, or as a prerequisite for, their training, should be considered high-risk as they can determine people's educational and professional life trajectories and thus affect their ability to secure livelihoods. If improperly designed and applied, such systems can violate the right to education and the right not to be discriminated against, as well as perpetuate historical patterns of discrimination.

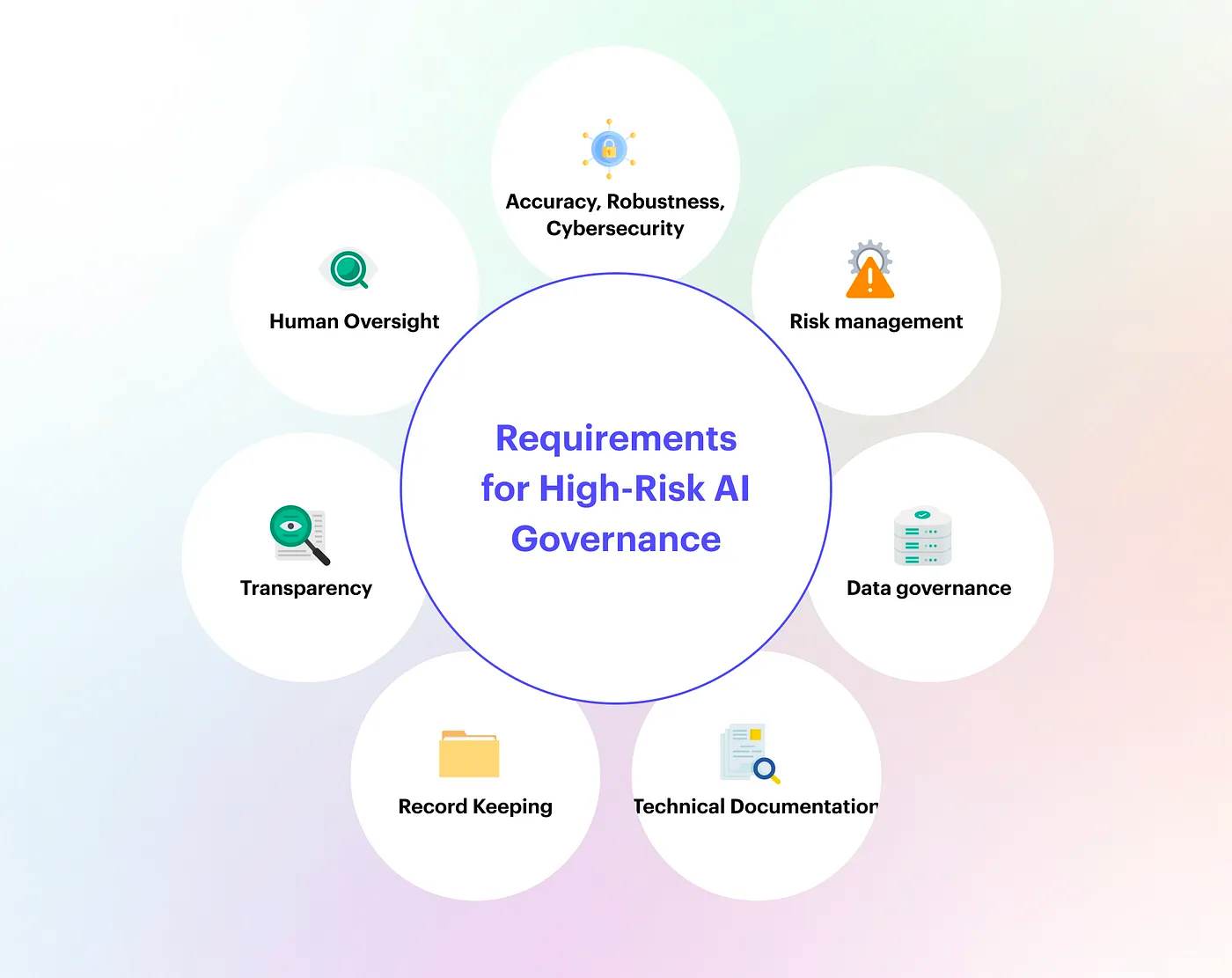

High-risk AI systems must meet many strict requirements to be legal. These include, among other things, requirements for risk management systems, testing of the system, data and data management, technical documentation, CE marking, registration of the system in the EU database, storage of logs, transparency and provision of information to the user, human oversight, as well as accuracy, robustness, and cybersecurity. All these requirements are described in Articles 8 to 15 of the Regulation and place high demands on the providers of the systems. In addition, special attention must be paid to risk management if a high-risk AI system is expected to be used by, or have an "impact" on, children under 18.

There are also requirements for users of high-risk systems. Users must:

- use the high-risk AI system in accordance with the instructions for use;

- ensure relevant input data appropriate to the purpose of the system;

- monitor the operation of the system based on the instructions for use;

- keep log files if they are under users' control;

- prepare a data protection impact assessment.

Limited risk

The third level of the risk system is limited risk, which covers things like chatbots, deep fakes, and emotion recognition. These systems are also subject to rules, though not as strict as those for high-risk systems. For example, users must be made aware that they are interacting with a machine, and content from systems that generate video, audio, or images resembling real objects (e.g., deep fakes) must be labeled so that users are informed it has been artificially generated or manipulated.

Minimal risk

The fourth level is minimal risk. It covers things like spam filters and computer games, and this level is not so interesting when we talk about teaching. An example could be a computer game where you interact with AI-controlled characters or play levels generated by artificial intelligence. The AI Regulation introduces no requirements for systems at this risk level, but providers can choose to follow the rules voluntarily.
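To make the four levels a little more concrete, here is a minimal Python sketch of how a school might note them down when taking stock of its systems. The level names and example uses come from the text above; the names `RiskTier`, `EXAMPLES`, and `tier_for` are our own illustrative choices, and the table is a simplification, not a legal classification.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    """The four risk levels named in the regulation."""
    PROHIBITED = "unacceptable risk"
    HIGH = "high risk"
    LIMITED = "limited risk"
    MINIMAL = "minimal risk"


# Hypothetical, simplified lookup table built from the examples in this article.
EXAMPLES = {
    "emotion recognition in educational institutions": RiskTier.PROHIBITED,
    "admission to educational institutions": RiskTier.HIGH,
    "assessment of students": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
    "computer game": RiskTier.MINIMAL,
}


def tier_for(use_case: str) -> Optional[RiskTier]:
    """Return the level for a listed example use, or None if it is not listed."""
    return EXAMPLES.get(use_case.strip().lower())
```

For example, `tier_for("Chatbot")` returns `RiskTier.LIMITED`, while a use that is not in the table returns `None` and would need a proper legal assessment.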

Foundation models and general-purpose systems

On 11 May, an amendment added something interesting, namely the definition of a "foundation model":

An AI model that is trained on large-scale data, is designed for versatility of outputs, and can be adapted to a wide range of specific tasks.

According to the proposal, the data sets behind such a foundation model must be subject to appropriate measures that examine the suitability of the data sources and, where necessary, mitigate possible bias in them.

The amendment also seeks to define a "general purpose system", which is:

An AI system that can be used in and adapted to a wide range of applications for which it was not intentionally and specifically designed.

In other words, these are AI systems that can be used in many different situations, even if they were not originally designed specifically for them. The amendment makes these "general purpose" AI systems subject to transparency requirements, and the provider of such a system must train, design, and develop the foundation model (which underlies the system) in such a way as to ensure sufficient safeguards against the generation of content that contravenes EU law. Finally, these providers must document and publish a sufficiently detailed summary of their use of training data protected under copyright law.

ChatGPT, Google Bard, Midjourney, Dall-E, and other generative AI systems will fall under these rules!

These additions led Sam Altman from OpenAI to state that they were ready to pull ChatGPT out of the EU if it could not meet all requirements. However, he has since backtracked a bit and now says that they will do what they can to comply with the demands from the EU.

The regulation applies to providers that place AI systems on the market or put them into service in the EU, regardless of whether those providers are established in the EU or in a third country. For example, an American company cannot "just" set up a subsidiary in the EU to get off more lightly.

What will the AI regulation mean for using artificial intelligence in education?

When we want to use artificial intelligence in connection with teaching, we must, of course, comply with both the AI regulation and the GDPR. There are some requirements that we as users must meet if we use high-risk systems, but the bigger question is whether it will be possible to use such systems for teaching at all, given the demands placed on them.

High-risk AI systems in education

Here, we will mention some of the systems that will fall into the high-risk category:

- AI systems used to determine or assign access to degree programs

- evaluation of people in a test

- automatic assessment of students

- predictions about which students will drop out

It will still be possible to build such systems, but the manufacturer will have to comply with many strict rules.

Example of a high-risk system

Professor, Dr. Jur., Henrik Palmer Olsen from the Faculty of Law, University of Copenhagen, has allowed us to share the example below. The example has been used in the Djøf course "The AI regulation in practice". It is a hypothetical example inspired by some Dutch universities, which, during the COVID-19 pandemic, required students to be monitored during home exams.

A large university in the metropolitan area has decided to cut its exam costs. The university will abolish the cost of exam rooms and invigilators. Instead, home exams are introduced in all subjects. To ensure that students do not cheat at the exam, all students are required to install a program on the PC they use for the exam.

The program monitors their user behavior through the built-in camera. The university has contracted with a company (We See You - WSU), which specializes in automated behavior analysis and which, based on facial and movement analysis as well as analysis of keyboard behavior, etc., assesses whether students are cheating. WSU's system generates a score for each student, which indicates the probability that they have cheated on the exam. This score is communicated to the assessor of the individual student's exam paper, and on this basis the assessor decides whether to approve or cancel the exam attempt (in which case the student must be re-examined). To what extent do the AI Act and the GDPR each apply to WSU's software, and what are the roles of WSU and the university under each of the two sets of rules?

Before we dive into how the AI regulation categorizes the above example, there is a technical challenge to consider.

Technologically, it is important that WSU's system is reliable and that it produces no false positives or false negatives. And here is the first challenge: how do you make an IT system that is never wrong? For example, one could imagine a student looking away from the screen to think, which the behavioral analysis would register as cheating because the student is not constantly looking at the screen.
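A small calculation can show why false positives matter so much here. The Python sketch below is purely illustrative: the function name `expected_flags` and all the numbers (1,000 students, 2% cheaters, a system that catches 90% of cheaters and correctly clears 95% of honest students) are our own assumptions and say nothing about any real system.

```python
def expected_flags(students: int, cheat_rate: float,
                   sensitivity: float, specificity: float) -> dict:
    """Estimate how many flagged students are actual cheaters.

    sensitivity: share of cheaters the system flags.
    specificity: share of honest students the system does not flag.
    """
    cheaters = students * cheat_rate
    honest = students - cheaters
    true_positives = cheaters * sensitivity
    false_positives = honest * (1 - specificity)
    flagged = true_positives + false_positives
    # Share of flagged students who actually cheated.
    precision = true_positives / flagged if flagged else 0.0
    return {
        "true_positives": true_positives,
        "false_positives": false_positives,
        "precision": precision,
    }


# Assumed numbers, for illustration only.
result = expected_flags(students=1000, cheat_rate=0.02,
                        sensitivity=0.90, specificity=0.95)
print(result)
```

With these assumed numbers, about 49 honest students are flagged alongside 18 actual cheaters, so only roughly a quarter of the flags would point at someone who really cheated. Even a system that sounds accurate can therefore wrongly accuse many students when cheating is rare.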

WSU's system will fall into the high-risk category, as automated behavioral analysis is used to assess whether the student is cheating on the exam. This can threaten the fundamental rights of the individual, in particular the right to privacy. In fact, we are already moving toward a high-risk system simply because of the use of cameras and automated behavioral analysis (biometric data). From an ethical perspective, it also raises several questions about surveillance, and many may find it uncomfortable to be filmed in their own homes.

In addition, the WSU system will also play a crucial role in decisions that shape a person's life (predictions, recommendations, or decisions that affect environments with which the AI system interacts). For example, if the system determines that the student has cheated on the exam, it will have major consequences for the individual. It may be that the exam has to be taken again or that the student is expelled from the program. Given this role, the system will probably also be considered a high-risk AI system on that ground.

There will also be an additional requirement for transparency in how WSU's system arrived at a decision. At the same time, students must be informed that an AI system monitors them, how it works, and how it makes decisions.

If you also wanted to use WSU's system to determine whether students have passed an exam, that use would likewise qualify as a high-risk AI system.

In the example, the university must comply with the GDPR since it processes sensitive personal information. The university will be the data controller, and WSU will be the data processor. At the same time, the university must ensure that WSU complies with the GDPR, that the data is processed lawfully, fairly, and transparently, and that students are informed about how their data is used.

General purpose systems in education

If you want to use ChatGPT, Google Bard, Midjourney, Dall-E, or other generative AI systems in education, there are several requirements that the provider must comply with.

Here, the user must know that they are interacting with an artificial intelligence. When generative AI, e.g., creates images or writes text, the output must be marked to show that artificial intelligence was used to produce it. At the same time, the provider must also comply with the transparency requirements described above.

What does this mean for the use of artificial intelligence in education?

As a starting point, the AI regulation means that there must be control over data and how data is used together with artificial intelligence. As we read the regulation, it is very much about protecting the citizens of the EU and having a set of ground rules everyone must follow.

As a teacher, it is important to know which of your tools are AI systems and which of them are approved for use in teaching. One can expect developers to start adapting their artificial intelligence systems to the AI regulation; otherwise, they will be excluded from the European market. However, it is important that you still comply with the GDPR and that, in the meantime, you do not uncritically send students to various tools online.

Schools must examine which of the regulation's four categories their current systems fall into and how the systems might be adapted. This can be challenging if you have or want to develop high-risk systems. The actual responsibility for complying with the AI regulation lies with the developer, but compliance brings added costs that will make the systems more expensive. At the same time, schools also need to make it clear to teachers which materials they are allowed to use as part of teaching.

Although it will probably take 2-3 years to implement the regulation fully, you can already start getting ready.

If you want more knowledge about the new rules, we recommend the following course in the AI regulation:

Sources

https://digst.dk/media/19302/national_strategi_for_kunstig_intelligens_final.pdf