We are nearing the end of 2024, and it’s time for this year’s final newsletter. This edition delves into current topics such as AI’s potential and challenges in education, the increasing use of generative AI in Denmark, and the need for clear guidelines for using AI technology.

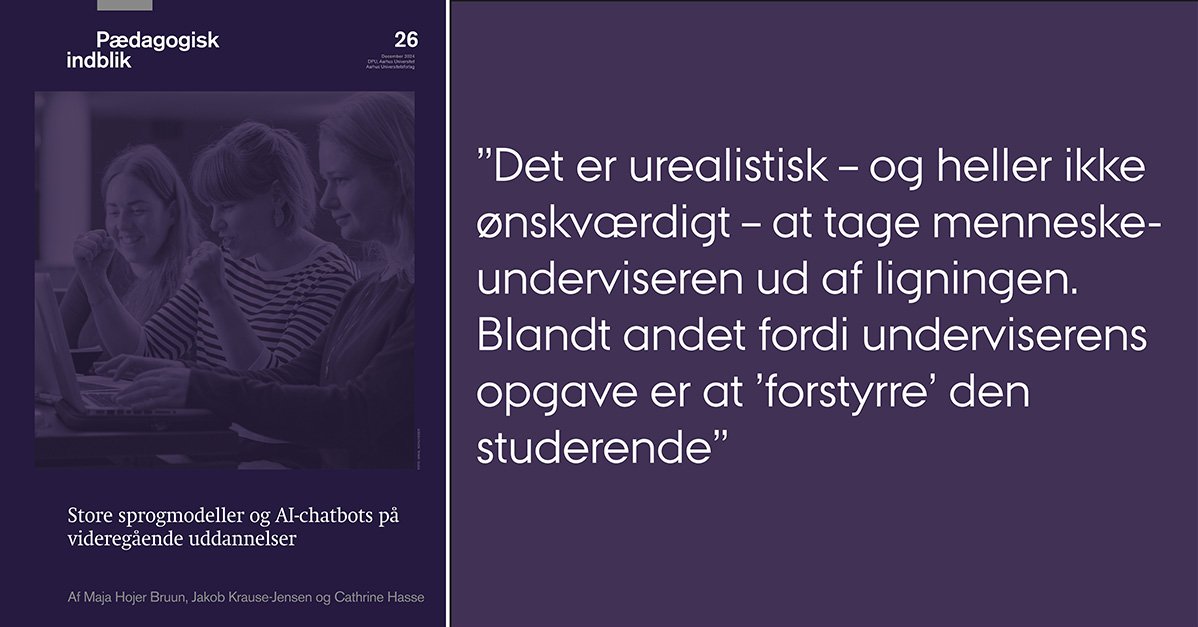

- A new report from DPU highlights AI’s potential as a teaching tool in higher education but emphasizes the importance of keeping human educators as central facilitators.

- A podcast featuring DPU experts discusses how AI can enhance learning when used as a reflective tool.

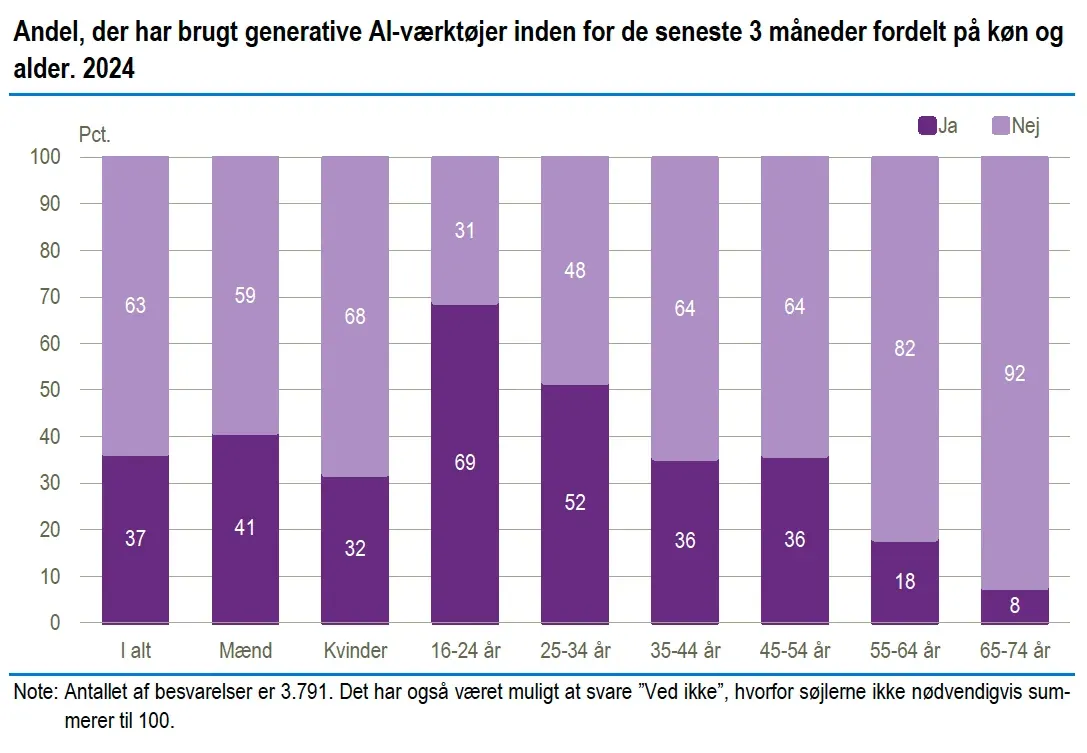

- A survey from Statistics Denmark shows that 37% of Danes use generative AI such as ChatGPT and Midjourney, with use concentrated among younger and highly educated people.

- A Swedish teacher criticizes the digitization and use of AI in schools, expressing concerns about its impact on teaching quality and educators' working conditions.

- An analysis shows that AI plays a smaller role in political misinformation than previously feared and recommends shifting the focus toward structural solutions.

- AI-generated deepfakes are being used for bullying in the US, prompting calls for clear guidelines and more comprehensive legislation.

- WisdomBot, a new language model for education, is designed to support deep learning based on Bloom’s taxonomy.

- Plus more news from the week

Happy reading, and we’ll be back stronger in 2025!

AI chatbots and large language models in higher education

A new research overview from DPU, Aarhus University, sheds light on AI's potential as a support tool in teaching and learning in higher education. The report addresses how AI can be used as a supplement to educators, e.g., to deliver quick and personalized feedback, but also emphasizes the need for human interaction to ensure deep learning.

The study shows that much of AI research focuses on individualized learning, where AI strengthens students' self-regulated learning. However, this contrasts with Nordic educational traditions that emphasize community and dialogue. The technology's limitations, such as the lack of contextual understanding and the risk of creating learning voids, are highlighted as critical points. The researchers point out that AI cannot replace the educator's role as a facilitator who disrupts and challenges the students' understanding and learning processes.

The report is based on 141 studies and discusses five main themes:

- Perspectives on learning theory - The lack of pedagogical research on AI's role in learning.

- Teacher bots as teaching assistants - AI's potential as a supplement to educators.

- The students' experiences - The risk of superficial learning and challenges with academic integrity.

- AI literacy and academic skills - There is a need to build critical reflection in using AI.

- Ethics and inequality - AI's bias and potential consequences for social inequalities.

The report highlights the need to develop clear pedagogical frameworks and strategies so that AI can be used to strengthen learning rather than replace the educator's role.

Pedagogical Insight 26: Large Language Models and AI Chatbots in Higher Education (research overviews from DPU)

Listen to the podcast: Large language models and AI chatbots in higher education

The podcast explores the potential and challenges of integrating AI chatbots in higher education.

Cathrine Hasse and Maja Hojer Bruun from DPU discuss how AI can be a valuable learning resource if used correctly. Annette Pedersen, special consultant in e-learning at the University of Copenhagen, highlights the importance of pedagogical development that can help students use AI to strengthen their learning rather than deliver quick answers.

The focus is on helping students develop learning strategies where AI is a reflective tool that challenges their understanding and promotes deeper learning.

IT Usage in the Population 2024

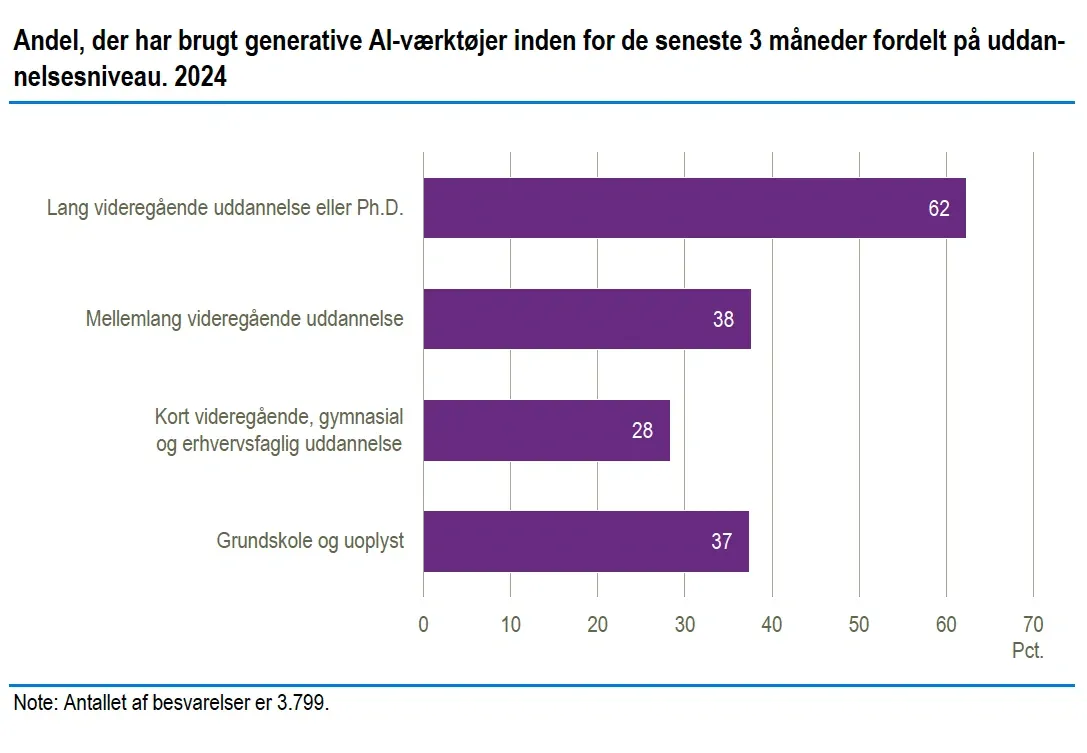

The report IT Usage in the Population 2024, published by Statistics Denmark, reveals that generative AI is becoming increasingly widespread in Denmark. 37% of the population uses the technology, and young and highly educated people are clearly overrepresented among users. Generative AI, which includes tools like ChatGPT and DALL-E, is mainly used for text and image generation and for coding.

Age and education:

- 69% of 16- to 24-year-olds use AI, while only 8% of 65- to 74-year-olds do.

- 62% of people with extended higher education use AI, while only 28% of those with shorter education do.

Source: It-anvendelse i befolkningen (IT Usage in the Population), Statistics Denmark

Purpose of use:

- 61% use AI privately, e.g., to create content or find inspiration.

- 49% use AI professionally, especially in creative or technical professions.

- 38% use AI for education, e.g., to write assignments or understand complex topics.

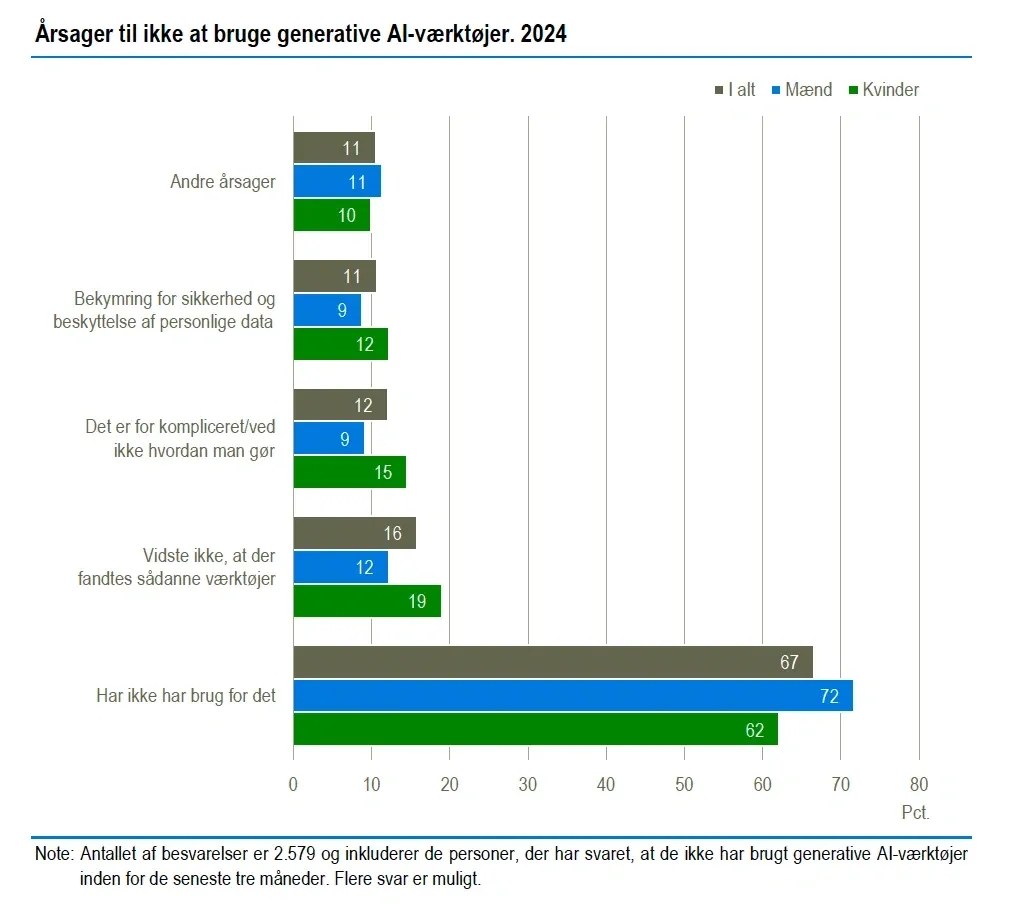

Barriers to use:

Among those who do not use AI, 67% say they do not need it, while 19% do not know such tools exist. Some respondents also mention security and data protection as concerns.

The report highlights AI's potential to simplify tasks, support learning, and promote creativity. However, it also points out the need to bridge knowledge gaps and address security concerns.

Swedish teacher criticizes AI in schools

The Swedish teacher Rickard Öhman sharply criticizes the union Saco for collaborating with the business community to promote AI in primary schools without involving teachers' perspectives. He fears that digitalization and AI may worsen both teachers' working conditions and the quality of teaching.

Öhman, who teaches philosophy and history, points out that previous school digitalization projects - such as experiments with digital national tests and the spread of one-to-one technology - have often created more problems than solutions. Among other things, these initiatives have increased teachers' administrative burdens and led to technical difficulties in the classroom without strengthening students' learning. Öhman emphasizes that AI is necessary neither for good teaching nor for students' future career opportunities. He calls for a more cautious approach and points out that traditional teaching and teachers' professional judgment remain the best foundation for a strong school.

AI’s Role in Misinformation During Elections: Focus Should Shift from Technology to Societal Factors

During the 2024 election campaigns, artificial intelligence was often highlighted as a source of political misinformation. However, a new analysis from the WIRED AI Elections Project paints a more nuanced picture. The study examined 78 cases of AI use, and in more than half of these, AI was not used for deception. Instead, AI was employed for legitimate purposes, such as producing campaign materials and improving the quality of information.

Where AI was used for deception, the same result could easily have been achieved without it, using traditional methods such as Photoshop or simple video alterations, so-called “cheap fakes.” For instance, videos were manipulated to make it appear as if a politician had said the opposite of their actual statements. In the U.S. election alone, these inexpensive techniques were used seven times as often as AI-generated misinformation.

The analysis concludes that focusing on AI as the primary cause of misinformation is misleading. While AI lowers the cost of producing false content, the real issue lies not in how the content is disseminated but in the demand for misinformation. This demand is driven by political polarization and entrenched worldviews. Recipients of misinformation are often already aligned with the message and, therefore, less critical of its credibility.

“Seen in this light, AI misinformation plays a very different role from its popular depiction of swaying voters in elections. Increasing the supply of misinformation does not meaningfully change the dynamics of the demand for misinformation since the increased supply is competing for the same eyeballs.”

Sayash Kapoor and Arvind Narayanan, AI Snake Oil

Historically, new technologies such as photo and video editing have also sparked similar fears of misinformation. However, the solution has never been technological. Instead, the analysis points to the need for structural and institutional changes. For instance, strengthening journalism and building democratic trust are necessary measures.

Deepfakes and AI Bullying Create Challenges for Schools in the US

In the United States, schools are facing increasing issues with AI-generated deepfakes used for bullying and harassment. According to an article from Education Week, examples include 14-year-old students creating pornographic images of classmates and a school staff member producing a fake audio file to discredit a principal. These incidents have led to social conflicts, legal consequences, and demands for better guidelines.

A study by the EdWeek Research Center reveals that 67% of school leaders believe deepfakes have misled students. Yet, only 7% of teachers and administrators have received adequate training to address the issue. Several schools, including those in New York and California, have updated their behavior policies to classify deepfakes as a form of cyberbullying.

The article also highlights the need for national legislation, such as the proposed Defiance Act, which would assist victims and allow for legal action against creators of harmful deepfakes.

In Denmark, legislation similar to the Defiance Act could expand existing rules against digital violations to specifically include AI-generated deepfakes, safeguarding individuals’ digital rights and integrity.

The Nerd Corner

WisdomBot: A Language Model Tailored for Educational Use

A new article introduces WisdomBot, a large language model (LLM) specifically developed for educational use. WisdomBot supports basic and advanced learning, using Bloom’s taxonomy as its framework. The model employs retrieval-based methods, drawing on local knowledge bases and search engines to provide accurate and up-to-date answers.

WisdomBot has been tested in various educational scenarios and outperforms other Chinese language models, such as Chinese-LLaMA and Qwen, in tasks like answering subject-specific questions, creating assignments, and providing guidance. Based on textbook data and self-instruction, the model has demonstrated strong results in science, technology, and social sciences.


This Week’s Other News

Behind the paywall