ChatGPT becomes multimodal

Just before last week's newsletter deadline, OpenAI launched a version of the ChatGPT app with built-in speech and image recognition features. In the app, which is available for both iOS and Android, users can initially dictate their prompts using speech-to-text and then have the answer read aloud. This makes it possible to hold a spoken conversation with the language model. At the same time, OpenAI announced that it is starting to roll out image recognition in the model, which will make it possible to combine images and speech in a single prompt.

Several examples of the new technology have already emerged. Below, Mckay Wrigley shows how students could use image recognition:

Source: https://x.com/_borriss_/status/1707412411836162279?s=46

The new multimodal options can thus extend traditional learning resources and adapt the content to the individual student. In the long term, images and illustrations may no longer be static but could become more interactive and engaging for students.

On Viden.AI, we've previously written about MiniGPT-4, which can also be used as an image analysis tool, and about how Bing and Bard can be used for visual searches. At first glance, the new features in ChatGPT seem to be ahead of the competition, and we hope to gain access soon.

ChatGPT can once again search the web

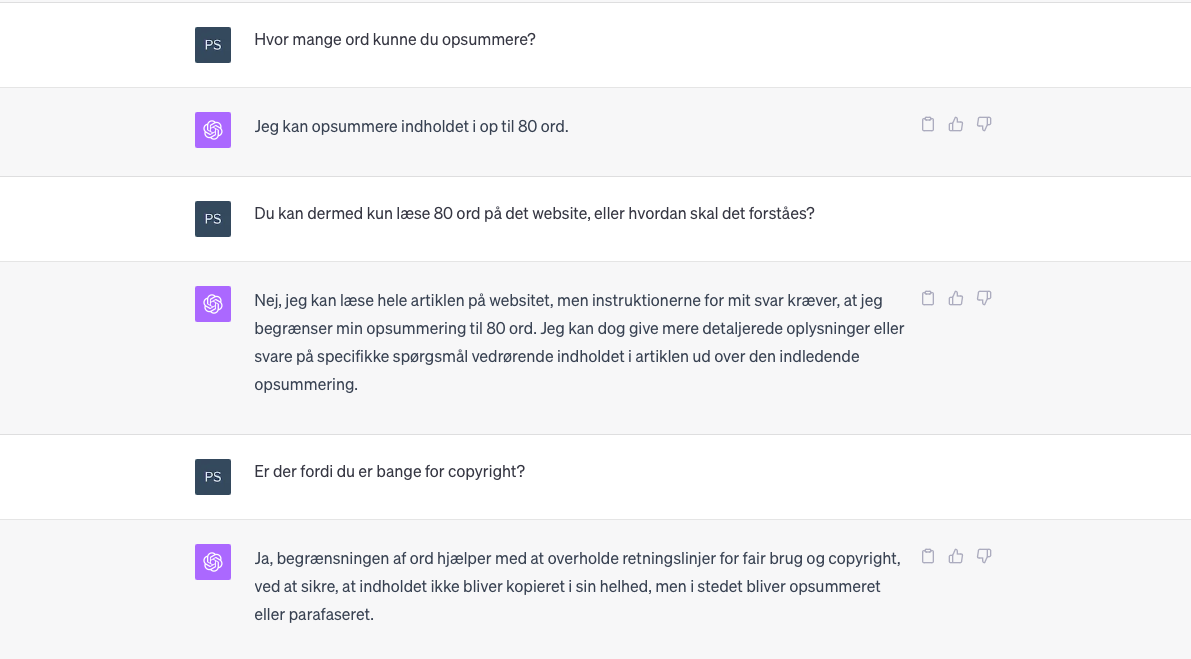

Paying customers have also been given access to ChatGPT with Bing Search, which once again lets them find up-to-date information on the web. OpenAI had previously shut the feature down because it was too good at getting past paywalls and retrieving entire articles.

We have tested the feature ourselves, but it is quite limited: it only returns summaries of about 80 words (although it sometimes says 100 words).

The Decoder has investigated ChatGPT's ability to search the web and written an article whose central message is that traditional search engines are more useful and accurate. The problem is that ChatGPT rewrites the information, misses the point, or leaves out essential aspects of the text, whereas search engines display relevant snippets directly from the source. However, search engines also have problems of their own, such as spam, misinformation, and a lack of transparency about web pages.

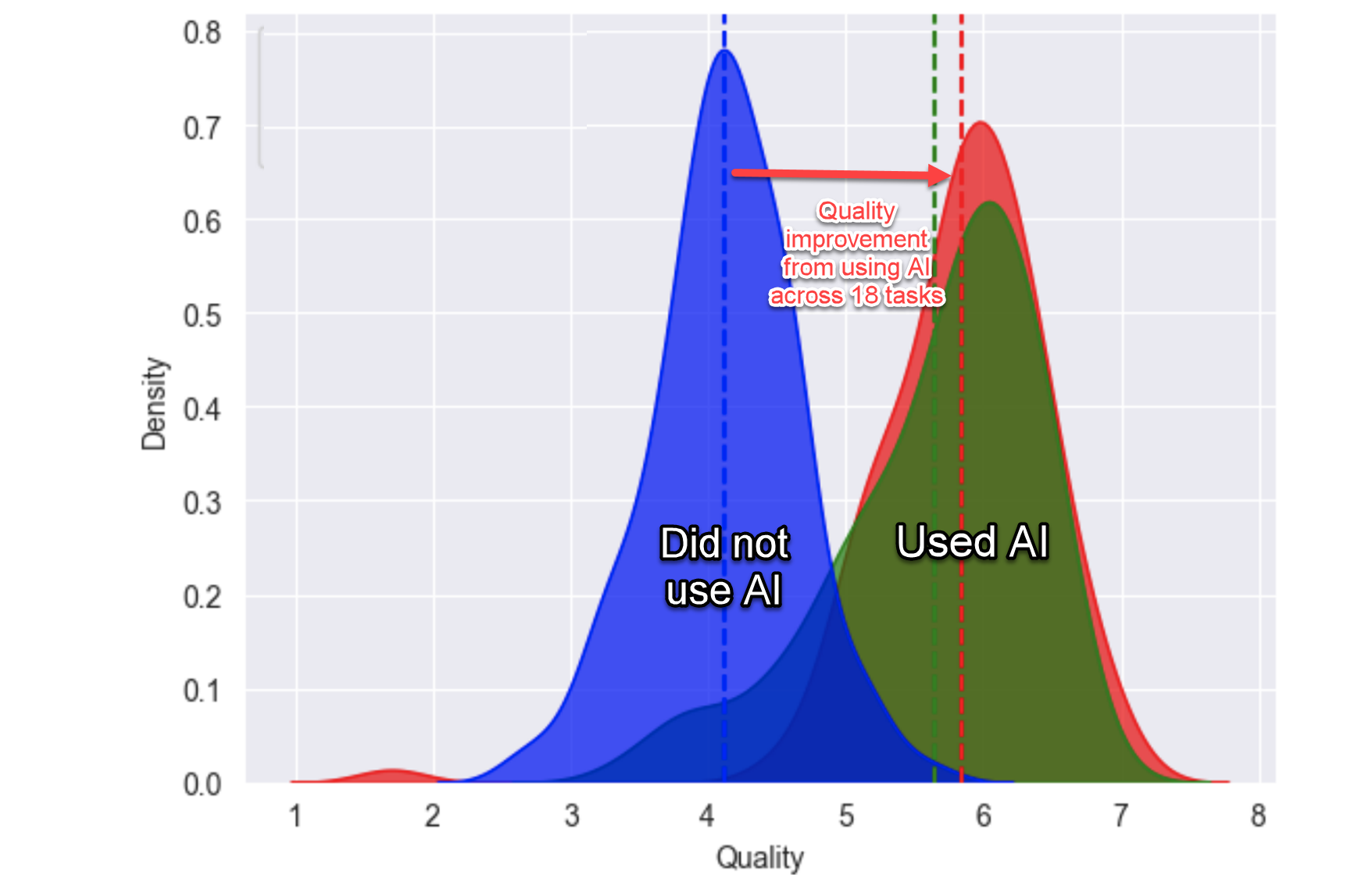

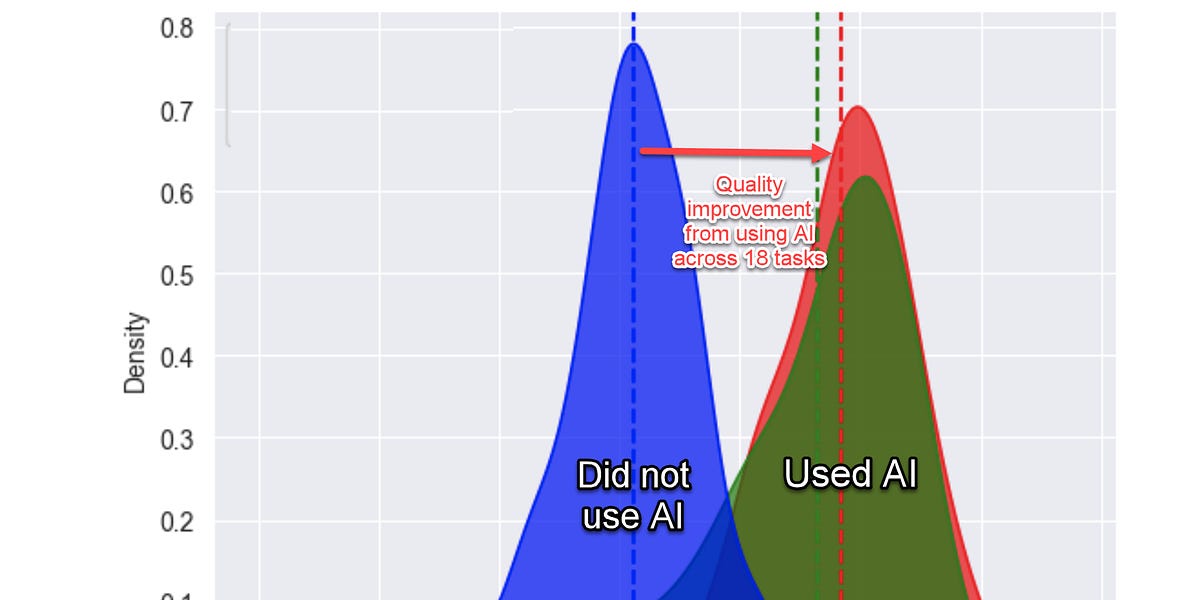

A new study shows that GPT-4 can act as a significant skill equalizer

The Boston Consulting Group conducted the study together with several academic researchers, including Ethan Mollick. In the study, the consultants' skills were first assessed, and they were then divided into two groups based on their performance before using AI. The consultants who initially performed below average (the bottom half of the group) saw a marked improvement in their work with AI, increasing the quality of their results by as much as 43%.

Consultants who initially performed above average (the top half of the group) also improved with the help of AI, but to a lesser extent than those who had performed worse. For this group, the quality of their results improved by 17% after using GPT-4, considerably less than the 43% improvement achieved by the bottom half.

We've seen similar studies in education, where the lowest-performing group also benefited the most from access to ChatGPT.

Below, we have gathered a few links about the study:

A new article on Viden.AI

When people write and talk about bias in language models like ChatGPT, they usually mean the mismatch between what the model writes and what we would like it to write (perhaps each individual's idea of an ideal world). In reality, it is more about our expectations of the data used to train the models.

Claus has written an article about bias, or "skews" as he prefers to call them, in large language models. Among other things, the article looks at why and how bias occurs. He also discusses potential biases in Google searches and rounds off with the biases we all have ourselves.

As educators, we must inform and educate our young people. It is essential that we help future generations become critical, reflective thinkers who learn to use new technologies on an informed basis. Teaching about the potential biases of these technologies, the need for critical thinking, the validation of information, and healthy skepticism might be a good place to start! In any case, we must be very conscious of what to expect from language models when we use them!

Andreas Lindenskov Tamborg, assistant professor at the University of Copenhagen, wrote on LinkedIn:

This is a strong post and an essential contribution to sharpening what we mean by and understand as bias. As things stand, this should be part of the core material in both primary and secondary schools.

Read the article here:

News

Recommendation of the week

Teachers' Room is a podcast about teaching created by Morten Gundel, who teaches social studies and communication & IT at H.C. Ørsted Gymnasium in Lyngby. Through conversations with researchers, teachers, and students, he tries to learn more about the pedagogical and didactic challenges he faces daily as a high school teacher.

This week, Viden.AI stopped by to chat with Morten about what we've learned about artificial intelligence in education after a year with ChatGPT. Listen to the new episode and dive into the other fascinating episodes.