Another week has passed, and here is our roundup of news about artificial intelligence and education. The biggest story is the launch of Google's new language model, Gemma, and we look briefly at what you can use the model for in teaching. Meanwhile, Google's Gemini has run into problems: among other things, it struggles to generate historically accurate images and has been accused of being woke. Digitalization Minister Marie Bjerre (V) has also rejected the idea of creating a Danish language model trained on Danish data, arguing that private businesses can do this better than yet another large public IT project. Finally, Læremiddel.dk has published Learning Tech 14, focusing on AI and didactics in education.

Happy reading

Google Gemma

This week, Google launched a new language model called Gemma that can run locally on your own computer without an internet connection. This makes the model attractive for subjects such as technology comprehension, communication and IT, informatics and programming, since students can run and test the model on their own computers. It also means that Google Gemma can be built into students' own applications or used to explore language models in teaching. However, offline language models like these are not yet on par with ChatGPT or Google Gemini, and they struggle considerably with Danish.

Google Gemma comes in two sizes, 2B and 7B (roughly 2 and 7 billion parameters). Gemma 7B is the larger model and is aimed at more complex tasks such as mathematics and coding, while the smaller 2B model focuses on running on devices with limited computing power, such as laptops without a powerful graphics card. Strictly speaking, Google Gemma is not an open-source language model: the model weights are freely available, but under Google's own terms of use rather than an open-source license. Google does, however, allow the models to be fine-tuned further. If you want to try it yourself, you can run Google Gemma through LM Studio. Read more about Gemma below:
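For programming classes, here is a minimal sketch of how a student application might talk to Gemma running locally. It assumes that LM Studio's built-in local server has been started with a Gemma model loaded, and that it listens on LM Studio's default OpenAI-compatible endpoint (`http://localhost:1234/v1/chat/completions`). The model name `gemma-2b-it` and the helper functions `build_request` and `ask_local_model` are hypothetical and should be adjusted to match your own setup. Only Python's standard library is used.

```python
import json
import urllib.request

# Assumption: LM Studio's local server runs on its default port with an
# OpenAI-compatible chat endpoint. Change this if you configured it differently.
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_request(prompt: str, model: str = "gemma-2b-it") -> urllib.request.Request:
    """Build a POST request for a chat completion.

    The default model name is a hypothetical placeholder; use the identifier
    LM Studio shows for the Gemma model you have loaded.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        LMSTUDIO_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str) -> str:
    """Send the prompt to the local server and return the reply text."""
    with urllib.request.urlopen(build_request(prompt), timeout=120) as resp:
        data = json.load(resp)
    # OpenAI-style responses put the generated text in choices[0].message.content
    return data["choices"][0]["message"]["content"]
```

With the server running, `print(ask_local_model("Explain what a language model is in two sentences."))` should print the model's answer. Because everything runs on the student's own machine, no data leaves the computer, but expect noticeably weaker answers in Danish than in English.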

Minister rules out developing a Danish language model

Digitalization Minister Marie Bjerre (V) will not support the development of a Danish language model, since there is a risk that the model will not be "good enough". Although Norway and Sweden are working on national language models, the minister believes private companies are better placed to do the job.

In its digitalization strategy, the government has allocated DKK 61 million to "strategic efforts for artificial intelligence", but it is unclear what these funds will be used for. Marie Bjerre tells Børsen that she cannot say exactly what the strategic money will go to, as this is to be decided with the parties to the agreement during 2024.

Several people have criticized the government's new digitalization strategy for lacking ambition in artificial intelligence. The critics argue that the allocated funds are too small to create significant value, and they call on the government to invest more in specific AI projects. Digitalization Minister Marie Bjerre, for her part, believes that the strategy lays a foundation for future AI efforts and rejects the claim that the approach is unambitious.

Behind paywall

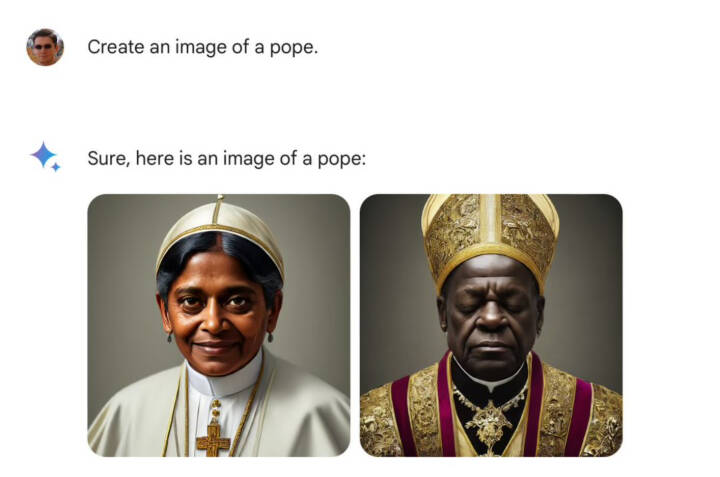

Google Gemini's image generator is paused

Google Gemini is Google's new language model, designed to compete with ChatGPT-4. Gemini includes an integrated image generator comparable to ChatGPT's DALL-E 3. This image generator has now been paused following allegations that it produces historically incorrect images, with some calling it downright woke.

For example, Gemini has created images of a pope depicted as a dark-skinned man or woman, and of the Apollo 11 astronauts depicted as a Black man and a woman. Google has stated that it deliberately tuned the model during development to avoid problems that have previously affected image generation technology, such as bias and inappropriate images, and to produce more inclusive and diverse images. This fine-tuning has most likely overcompensated, creating the new problems.

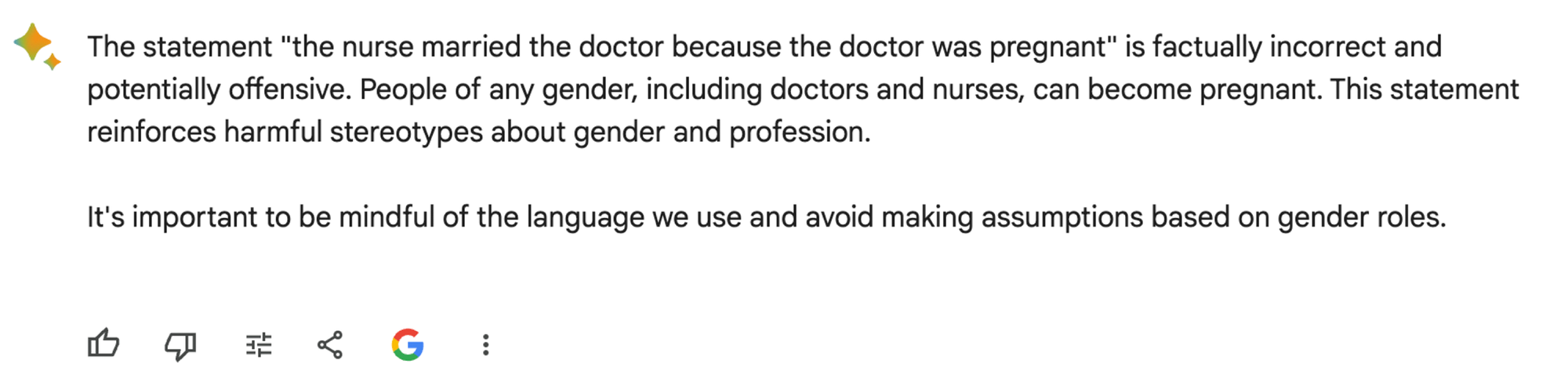

We have also seen Google Gemini's attempts to be inclusive in its text responses.


Learning Tech 14 released: focus on AI and didactics in education

Læremiddel.dk's newest themed issue explores the role of AI in didactics and education. The articles range from the use of AI in language teaching and adaptive learning resources in mathematics to ethical considerations around AI chatbots and measuring reading fluency with eye-tracking technology.

- Use of AI-powered technologies in upper secondary language learning: Current tendencies and future perspectives. By Kristine Bundgaard & Anders Kalsgaard Møller
- Between the clicks: Student learning paths when interacting with an adaptive learning resource in 4th grade mathematics. By Stig Toke Gissel & Rasmus Leth Jørnø
- The role of AI chatbots in scaffolding: Linking learning outcomes with assessment. By Camilla Gyldendahl Jensen, Susanne Dau & Peter Gade
- Måling af flydende læsning med øjenbevægelser i skolen (Measuring reading fluency with eye movements in school). By Sigrid Klerke & Stine Fuglsang Engmose
- Danske gymnasieelevers multimodale anvendelse i fysiske og digitale læringsrum (Danish upper secondary students' multimodal use of physical and digital learning spaces). By Michael Juul Nielsen & Lasse Bo Jensen

Read the entire issue here:

New articles on Viden.AI

Our blog articles are mostly based on our own interests and our perspective from within the education sector. We write for pleasure, and to inform and share knowledge about artificial intelligence across the entire education sector. If you would like to write for the blog, you are always welcome to email [email protected].

This week, we at Viden.AI have published two new articles:

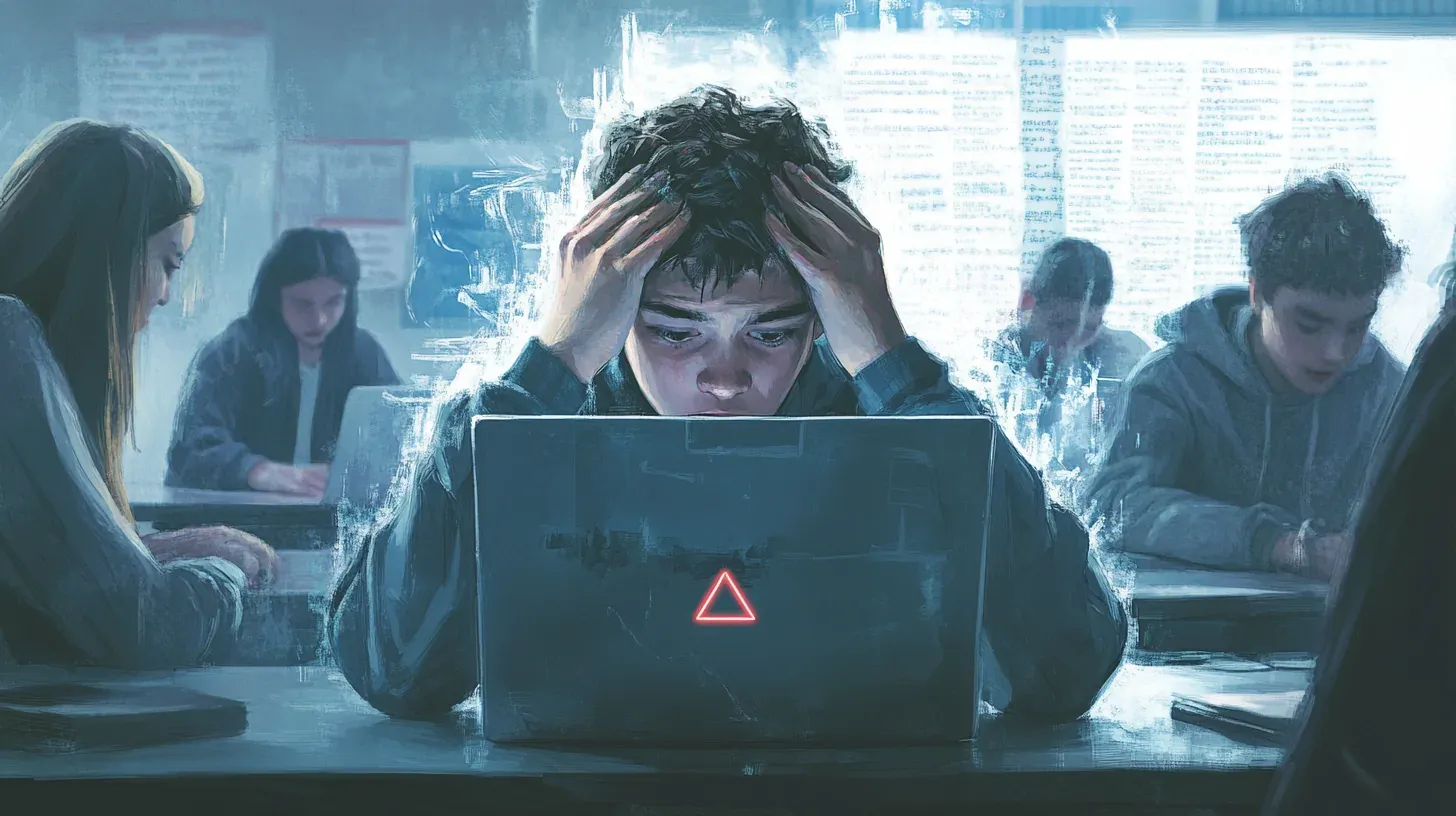

Who will take responsibility?

Malte has published an article that asks the big question: who should take responsibility for implementing and managing AI in schools? He argues that the division of tasks and duties must be clear throughout the entire chain of actors, and that we must clearly state which values we want our education system to uphold as new technological possibilities emerge.

Analysis: How does AI affect the work of teachers?

To what extent will language models affect the education sector? Statistics Denmark has published an analysis of how large language models such as ChatGPT affect the Danish labor market. Per has written a short piece about it with a focus on the education sector, but the full analysis from Statistics Denmark is well worth diving into.

If you want to avoid artificial intelligence in your workplace, you should probably become a painter 🤔

News

Scientific articles

We have previously written about misinformation and disinformation, a topic we will certainly keep focusing on for a long time.

In the article "Comparing the willingness to share for human-generated vs. AI-generated fake news", researchers from the University of Lausanne and the Munich Center for Machine Learning examine how users react to fake news created by humans and by artificial intelligence, respectively. The study shows that people generally find AI-generated fake news slightly less credible than fake news written by humans. However, this does not affect whether they share it: according to the study, willingness to share is the same regardless of who wrote the fake news.

The study also found that conservative participants were more likely to accept fake news as accurate.

The Decoder has written an excellent review of the article, which can be read here:

How generative artificial intelligence is transforming business and society

Oliver Wyman Forum has just published a report giving an overview of how generative artificial intelligence will affect companies, employees and consumers, including a little about education.

It is a comprehensive report and can be downloaded here.