Welcome to this week's delayed newsletter. Our main story is that the first rules of the EU AI regulation have now come into effect. This also means that employees at educational institutions working with AI need to have a solid understanding of the technology. Are all educational institutions ready for that?

It is also striking that the Data Protection Agency has chosen to deactivate Microsoft's AI service Copilot due to concerns over data protection and lack of transparency. This could have significant implications for how we work with AI in schools if Copilot is excluded. Jeppe Stricker writes in his latest newsletter about digital inequality and the challenges that come with dependence on large tech companies.

Last week, we published two new articles on Viden.AI:

- DeepSeek R1 has GDPR challenges, making it problematic to use in teaching.

- We have investigated how small AI learning objects can support teaching in communication and IT, including logo design.

In addition, we have chosen to focus on the following news stories in this newsletter:

- Videnskab.dk offers good advice on how students can use ChatGPT without compromising their learning and understanding.

- New research shows that AI does not eliminate jobs but changes demand.

- OpenAI has launched Deep Research, a new ChatGPT feature that can perform advanced research autonomously with citations and visual elements.

- A new study from Aarhus University shows that Danish researchers disagree on ChatGPT’s role in research – ranging from a useful tool to a limited technology.

- P1 Debat examines whether Denmark should invest in its own AI development or rely on international tech giants.

- AI author Rosy Lett challenges the idea of literature as a human art form – what does it mean for the future of authorship?

- Plus this week's other news

We will be closed for winter vacation in week 7 and will return with the newsletter on Monday of week 8.

Happy reading!

The first rules of the EU AI regulation come into effect

As of February 2, 2025, companies and authorities that develop, distribute, or use AI must comply with the first rules of the EU AI regulation. The purpose of these rules is to ensure that AI does not pose a threat to security, civil rights, or human dignity.

The regulation includes, among other things, a ban on certain AI applications, including systems that read emotional states in workplaces or educational institutions, as well as AI for social scoring of citizens, as these technologies can lead to discrimination and exclusion.

Additionally, companies and authorities working with artificial intelligence must ensure that their staff, and other individuals involved in the operation or use of AI, possess a sufficient level of AI skills. This applies regardless of what the AI systems are used for. All relevant actors in the AI value chain must have the skills to understand and comply with the AI regulation where it is applicable.

This is particularly important for teachers who must teach students about AI and use AI systems in their teaching. Teachers need to have a solid understanding of:

- How AI works and what opportunities and limitations the technology has.

- The ethical considerations and potential risks associated with the use of AI, including the risk of bias, discrimination, and invasion of privacy.

- The legal frameworks for the use of AI, including the rules and requirements of the AI regulation.

- How to teach students to use AI responsibly and appropriately.

Schools should therefore prioritize training and professional development for teachers in the field of AI. This could be through courses, workshops, or collaboration with experts. Teachers must be equipped to navigate the new AI landscape and guide their students.

At the same time, it is important for schools to have clear guidelines and policies for the use of AI in teaching that are in accordance with the AI regulation. Schools should continuously assess and monitor their use of AI to ensure that it is responsible and does not violate students' rights.

The AI regulation entered into force on August 1, 2024, and will be gradually implemented until 2027. Companies are advised to create an overview of their AI systems and assess which risk category their technology belongs to.

The Data Protection Agency warns against Microsoft Copilot due to GDPR challenges

The Data Protection Agency has chosen to deactivate Microsoft's AI service Copilot throughout the organization due to concerns over data protection and lack of transparency. Copilot collects personal data, but Microsoft does not provide sufficient insight into how this data is processed and stored.

This makes it difficult to assess whether the service complies with GDPR. Issues with Microsoft's handling of personal data are not new. The City of Copenhagen has previously acknowledged that it is continuing its illegal IT contracts because the systems involved are crucial for welfare benefits.

In the Netherlands, educational institutions have advised against using Copilot for the same reasons, while the Norwegian Data Protection Authority has pointed out the security risks associated with the service's access to data in the Microsoft 365 environment. The Data Protection Agency in Denmark agrees with Norway that Copilot, as currently designed, cannot be used by public authorities without significant uncertainties.

Organizations are therefore encouraged to gain a full overview of data processing before implementing the system – or refrain from using it.

AI, data security, and democratic challenges in the education sector

In his newsletter, Jeppe Stricker writes about how AI creates complex democratic dilemmas in the education sector. The Data Protection Agency has refused to use Microsoft Copilot due to concerns over data security, while the Chinese AI provider DeepSeek has launched a free language model that raises questions about transparency, censorship, and economic accessibility.

These issues could lead to a new form of digital inequality, where only wealthy institutions have access to transparent and secure AI solutions. Without a comprehensive political strategy, institutions are left to navigate a complex technological landscape without the necessary support.

DeepSeek: Potential and challenges in education

At Viden.AI, we have published an article about DeepSeek R1, an open-source language model from the Chinese company DeepSeek, which has quickly gained recognition for performing on a par with ChatGPT. The model has attracted significant interest, partly because it can be downloaded and customized freely, giving schools and educators new opportunities to work with AI in a controlled environment.

However, DeepSeek collects large amounts of user data, and since it is stored on servers in China, this raises serious GDPR challenges.

The Italian data protection authority has already asked DeepSeek to account for its handling of personal data, underscoring that the service is unsuitable for use in education via its website or app.

Logo design with AI learning objects

At Viden.AI, we have published an article on the subject of communication and IT, in which we developed and tested AI learning objects as part of our teaching. Instead of using ChatGPT, we built small AI-based learning resources that support specific parts of the teaching process and assist students in their creative work.

The idea is to use AI when it makes sense and adds value, and to utilize very specific and specialized tools in teaching.

Read the article here:

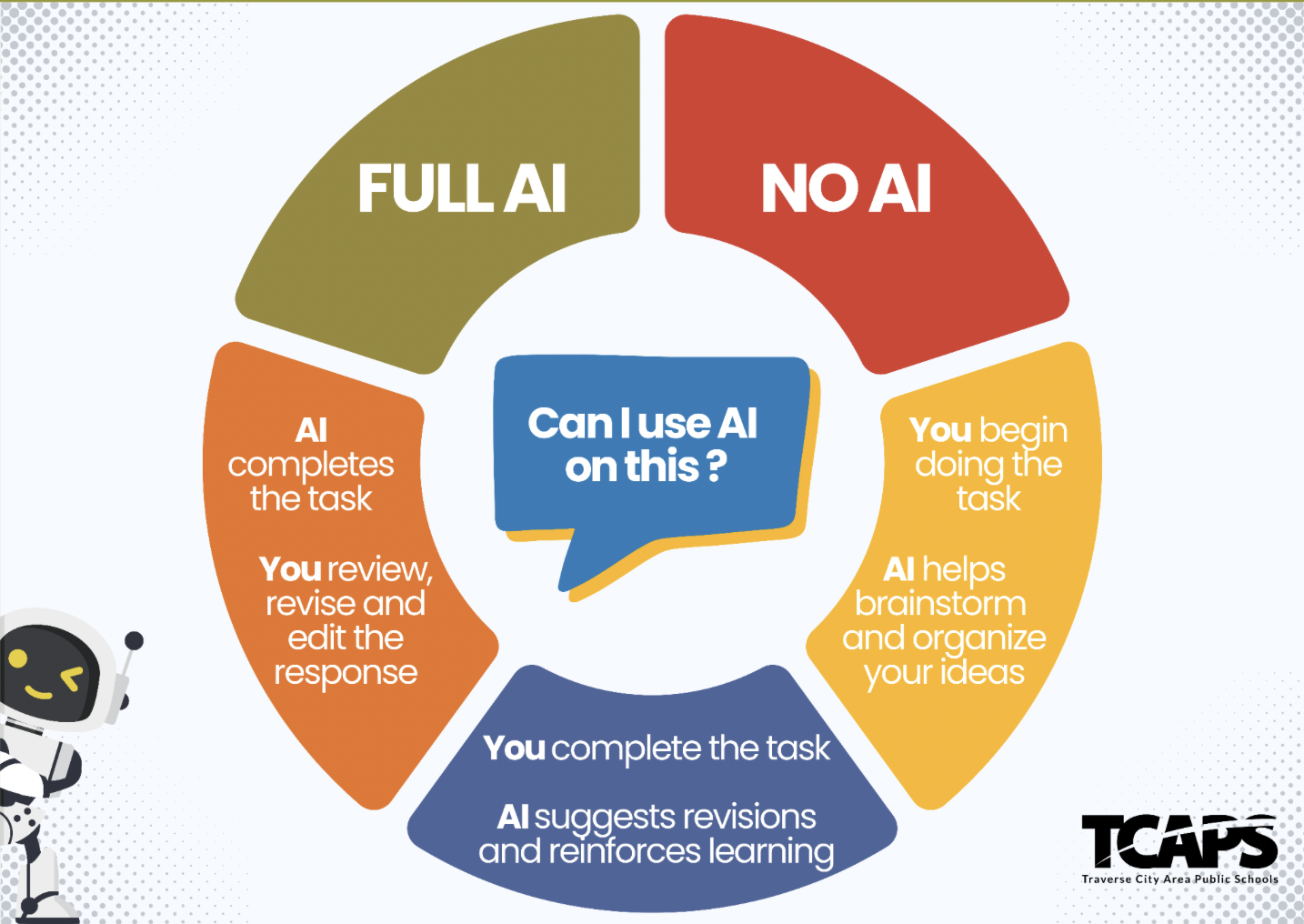

Videnskab.dk: How to use ChatGPT without losing educational value

Videnskab.dk has written an article on how students can use ChatGPT without compromising their learning. AI can be a useful tool, but if used uncritically, students risk losing the opportunity to develop a deep understanding.

Read the article here:

AI changes job demand in the labor market

A new study shows that AI has a significant impact on the labor market.

In certain areas, such as translation and copywriting, demand has fallen sharply since the launch of ChatGPT. At the same time, there is increasing demand for skills in machine learning, chatbot development, and creative content.

Researchers from the University of Copenhagen, among other institutions, have analyzed over three million freelance jobs and concluded that AI does not remove jobs in general, but redistributes them. Short-term and routine tasks are most at risk, while more complex and creative tasks are experiencing growth.

The study suggests that the labor market requires greater flexibility and that the education system should focus on broad competencies such as adaptability and curiosity.

OpenAI launches Deep Research

OpenAI has introduced a new feature for ChatGPT called Deep Research, which can perform complex, multi-step research tasks autonomously. The feature plans and executes searches, adapts to real-time information, and presents results with citations in a sidebar.

Users can ask questions using text, images, and files, such as PDFs and spreadsheets. The responses, which can take 5-30 minutes to generate, will also be able to include embedded images and diagrams in the future.

The feature will launch first for Pro users with up to 100 queries per month, while Plus, Team, and Enterprise users will have limited access.

Study: Danish researchers are divided in their use of ChatGPT

A new study from Aarhus University shows that Danish researchers are divided in their use and perception of ChatGPT. While some see AI as a revolutionary research tool, others find it limited or problematic.

The historian Benjamin Breen has tested ChatGPT for transcription, translation, and iconographic analysis of historical sources – with surprisingly good results. However, he points out that AI primarily produces analyses at the master's or PhD level and lacks genuine innovation.

In biomedicine, AI has made breakthroughs in protein research, while humanities researchers mainly use ChatGPT for text editing and formatting. Archaeologist David Stott is skeptical and believes AI can only reproduce existing knowledge, limiting its usefulness in his field.

The study shows that 40% of researchers use ChatGPT as a sounding board for hypothesis development, while only 8.5% use it to write abstracts. Literature professor Mads Rosendahl Thomsen sees potential in AI as a reflection tool but emphasizes the importance of transparency in academic work.

P1 Debat: A Danish ChatGPT?

P1 Debat discusses whether Denmark should invest in developing its own AI chatbots or leave the market to major tech giants and foreign technologies.

Panel:

- Lisbeth Bech-Nielsen, digitalization spokesperson, SF.

- Torben Blach, project manager, Alexandra Institute.

- Dina Raabjerg, digitalization spokesperson, Conservatives.

- Martin Ågerup, debater and economist, former director of the think tank Cepos.

- Anders Søgaard, professor, University of Copenhagen, expert in artificial intelligence and language.

- Birgitte Vind, digitalization spokesperson, Social Democrats.

Host: Morten Runge.

Listen to the debate here:

AI author debuts: What does it mean for literature?

The P1 program K-Live discusses the remarkable debut of AI author Rosy Lett, who has written a novel without human intervention. This raises the question: How much human influence is actually required to create good literature?

The panel explores whether AI can replace human creativity and how this will affect the future of literature.

This week's other news

This article has been machine-translated into English. Therefore, there may be nuances or errors in the content. The Danish version is always up-to-date and accurate.