In this week's newsletter, the focus is on the fact that students from DTU will in the future work with artificial intelligence as part of the teaching and for use in exams. At the same time, Italians are nervous about ChatGPT and believe that OpenAI collects the users' data and subsequently trains their language model on these (and thus violates the GDPR). They have therefore asked OpenAI to explain this within 30 days.

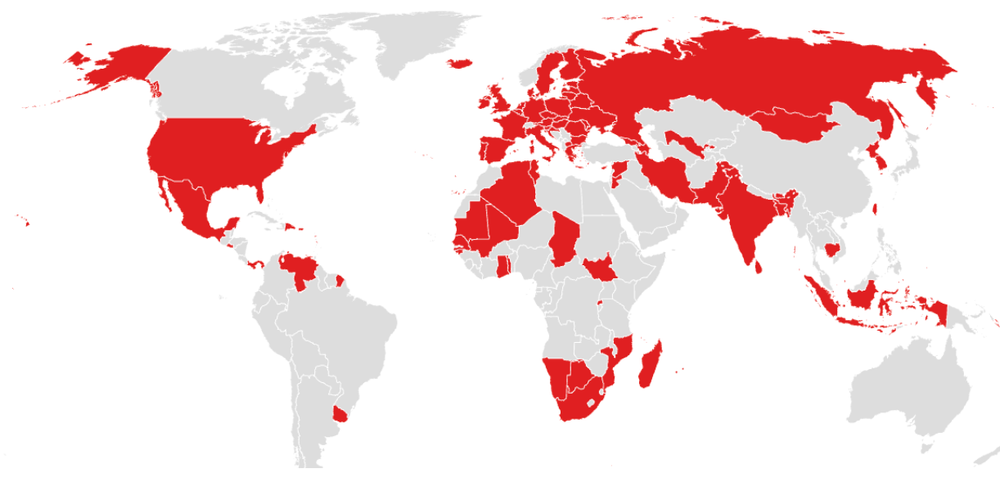

On Thursday, we also published an article about misinformation and disinformation, with a fear of how it will affect several upcoming elections around the world.

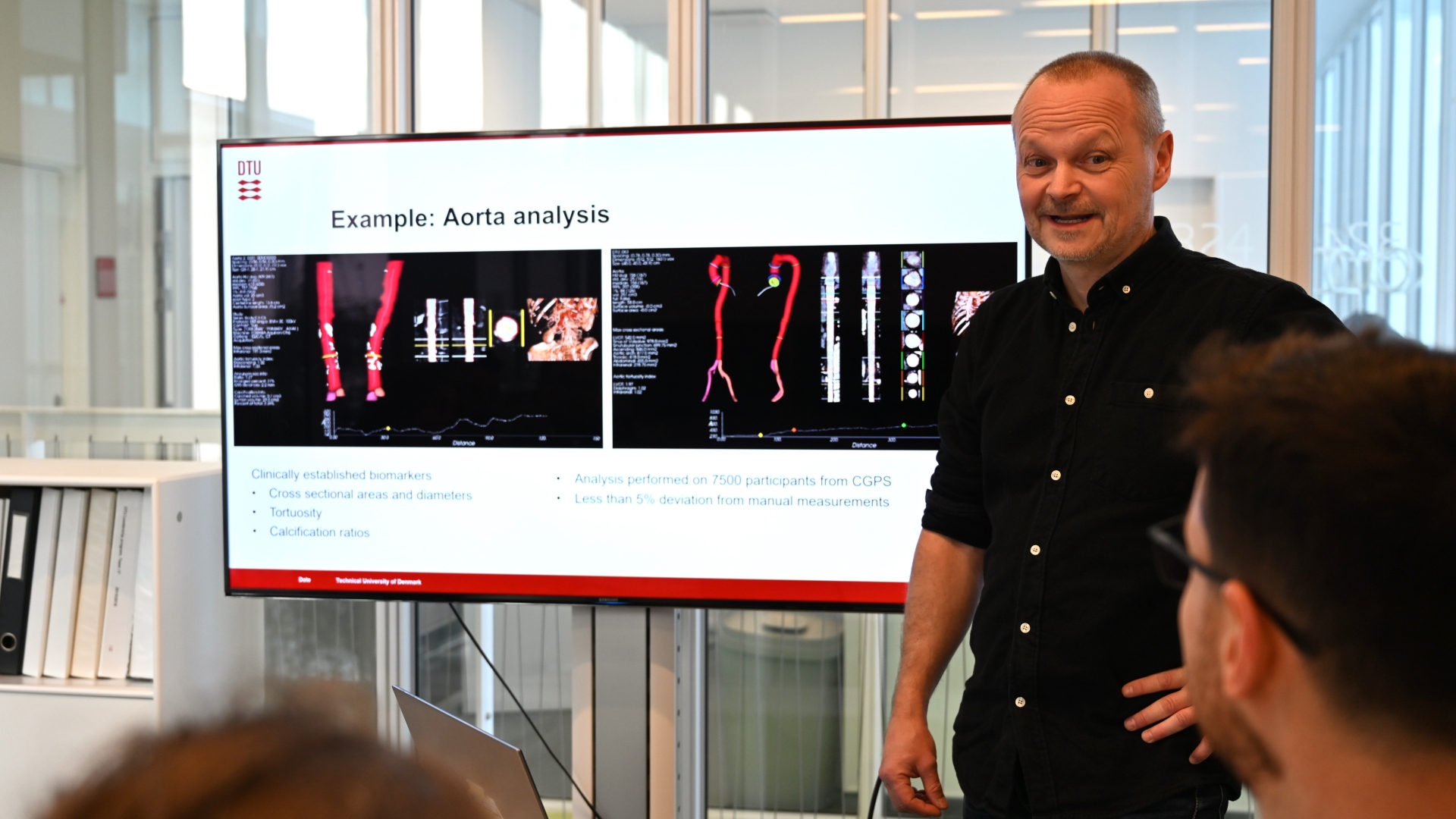

DTU is opening up artificial intelligence in teaching

As the first university, the Technical University of Denmark (DTU) has announced that artificial intelligence will be integrated into teaching and examination processes in 2024. This also includes language models such as ChatGPT.

The university writes that it is happening as part of an objective to utilize natural science and technical science for the benefit of society and that they want to offer leading engineering programs in Europe. They will adapt teaching methods and exam questions and use artificial intelligence more systematically in courses and research.

The university has developed guidelines for the ethical use of AI, focusing on academic integrity. These guidelines encourage students to use AI responsibly, including proper submission attribution.

Guidelines for the use of AI

It must be clearly stated if something in a delivery is not your product. If you do not do this, it is considered exam cheating, cf. DTU's honor code, rules for good academic practice, and exam cheating.

1. AI can generate output that is inaccurate or wrong. AI- is only an aid.

2. You are the guarantor of the quality of your work and that what you deliver is correct. AI-generated output may contain copyrighted material without it appearing.

3. It is your responsibility to ensure you do not infringe copyright. AI can be biased and trained based on particular attitudes.

4. You must therefore always be critical of its output. AI recycles the information you feed in. Therefore, you must avoid giving sensitive information.

5. The exam with 'All aids, but no internet access' means you may not use AI.

DTU adheres to the scientific publisher Elsevier's guidelines for the use of AI.

Italy's Data Protection Authority claims that ChatGPT violates the GDPR

Italy's Data Protection Authority, Garante per la protezione dei dati personali, has officially notified OpenAI that it has found violations of the Data Protection Act. Last year, ChatGPT was blocked for four weeks because the regulator had privacy concerns, something OpenAI was "fixed or clarified". In this connection, the supervisory authority set up a "fact-finding activity", which now claims to have found violations of the GDPR.

According to the BBC, the breach relates to the mass collection of users' data, which is then used to train the algorithm. At the same time, they are also concerned that younger users may be exposed to inappropriate content.

OpenAI has 30 days to respond to these accusations and explain their side of the story.

In its final decision on the case, the Italian Data Protection Authority will consider the ongoing work within the particular working group established by the European Data Protection Board (EDPB).

New articles on Viden.AI: Disinformation

In 2024, at least 64 democratic elections will be held worldwide, but how will AI-generated texts and deepfakes affect these elections?

We investigated this in a short article and during our research we discovered a website designed to generate and spread disinformation for only $400 per month. We have been in contact with the creators of this site, who have given us essential insight into this problem and raised a serious question: How many similar systems already exist out there, and what impact do they have on all the world's democracies?

We're surprised this topic isn't getting more attention. Perhaps the general population has not yet realized that artificial intelligence can be - and probably already is - abused.

The solution to meet this challenge is to educate young people (indeed the entire population!) in understanding digital technology. How else can they critically navigate the digitized world we live in?

News of the week

Bag betalingsmur

Bag betalingsmur

The geek corner

Meta launches Code Llama 70B

Meta has just released their language model, which focuses on coding, and it has 70 billion parameters. Meta calls it "the largest and best performing model in the Code Llama family". Before downloading it, you should know that it takes up 131 GB and requires a powerful GPU and a lot of RAM to run.

EvalPlus is an evaluation tool for testing code generation of large language models, and here, Code Llama scores 70B (65.2), which is quite a bit lower than GPT-4 (85.4).

You can test the model here without logging in: